8

Auditory Prostheses

From the Lab to the Clinic and Back Again

- 8.1 Hearing Aid Devices Past and Present

- 8.2 The Basic Layout of Cochlear Implants

- 8.3 Place Coding with Cochlear Implants

- 8.4 Speech Processing Strategies Used in Cochlear Implants

- 8.5 Pitch and Music Perception Through Cochlear Implants

- 8.6 Spatial Hearing with Cochlear Implants

- 8.7 Brain Plasticity and Cochlear Implantation

Hearing research is

one of the great scientific success stories of the last 100 years. Not only have

so many interesting discoveries been made about the nature of sound and the

workings of the ear and the auditory brain, but these discoveries have also

informed many immensely useful technological developments in entertainment and

telecommunications, as well as in clinical applications designed to help

patients with hearing impairment.

Having valiantly worked your way

through the previous chapters of the book, you will have come to appreciate

that hearing is a rather intricate and subtle phenomenon. And one sad fact

about hearing is that, sooner or later, it will start to go wrong in each and

every one of us. The workings of the middle and inner ears are so delicate and

fragile that they are easily damaged by disease or noise trauma or other

injuries, and even those who look after their ears carefully cannot reasonably

expect them to stay in perfect working order for 80 years or longer. The

consequences of hearing impairment can be tragic. No longer able to follow

conversations with ease, hearing impaired individuals can all too easily become

deprived of precious social interaction, stimulating conversation, and the joy

of listening to music. Also, repairing the auditory system when it goes wrong

is not a trivial undertaking, and many early attempts at restoring lost auditory

function yielded disappointing results. But recent advances have led to

technologies capable of transforming the lives of hundreds of thousands of deaf

and hearing impaired individuals. Improved hearing aid and cochlear implant

designs now enable many previously profoundly deaf people to pick up the phone

and call a friend.

And just as basic science has been

immensely helpful in informing design choices for such devices, the successes,

limitations, or failures of various designs are, in turn, scientifically

interesting, as they help to confirm or disprove our notions of how the

auditory system operates. We end this book with a brief chapter on auditory prostheses, in particular hearing aids and cochlear implants. This chapter is not intended to provide a

systematic or comprehensive guide to available devices, or to the procedures

for selecting or fitting them. Specialized audiology texts are available for

that purpose. The aim of this chapter is rather to present a selection of

materials chosen to illustrate how the fundamental science that we introduced

in the previous chapters relates to practical and clinical applications.

8.1 Hearing Aid Devices Past and Present

As we mentioned in

chapter 2, by far the most common cause of hearing loss is damage to cochlear

hair cells, and in particular to outer hair cells, whose purpose seems to be to

provide a mechanical amplification of incoming sounds. Now, if problems stem

from damage to the ear’s mechanical amplifier, it would make sense to try to

remedy the situation by providing alternative means of amplification. Hearing

loss can also result from pathologies of the middle ear, such as otosclerosis, a disease in which slow, progressive bone

growth on the middle ear ossicles reduces the

efficiency with which airborne sounds are transmitted to the inner ear. In such

cases of conductive hearing loss, amplification of the incoming sound can also be

beneficial.

The simplest and oldest hearing

aid devices sought to amplify the sound that enters the ear canal by purely

mechanical means. So-called ear trumpets were relatively widely used in the

1800s. They funneled collected sound waves down a narrowing tube to the ear

canal. In addition to providing amplification by collecting sound over a large

area, they had the advantage of fairly directional acoustic properties, making

it possible to collect sound mostly from the direction of the sound source of

interest.

Ear trumpets do, of course, have

many drawbacks. Not only are they fairly bulky, awkward, and technologically

rather limited, but many users are also concerned about their “cosmetic side effects.” Already in the 1800s, many users of hearing aids worried that these highly conspicuous devices might not exactly project a very youthful and

dynamic image. Developing hearing aids that could be hidden from view therefore

has a long history. King John VI of

Figure 8.1

A chair with in-built ear trumpet, which belonged to King John VI of

The design of King John VI’s chair is certainly ingenious, but not very practical.

Requiring anyone who wishes to speak with you to kneel and address you through

the jaws of your carved lion might be fun for an hour or so, but few

psychologically well-balanced individuals would choose to hold the majority of

their conversations in that manner. It is also uncertain whether the hearing

aid chair worked all that well for King John VI. His reign was beset by

intrigue, both his sons rebelled against him, and he ultimately died from

arsenic poisoning. Thus, it would seem that the lion’s jaws failed to pick up

on many important pieces of court gossip.

Modern hearing aids are thankfully

altogether more portable, and they now seek to overcome their cosmetic

shortcomings not by intricate carving, but through miniaturization, so that

they can be largely or entirely concealed behind the pinna

or in the ear canal. And those are not the only technical advances that have

made modern devices much more useful. A key issue in hearing aid design is the

need to match and adapt the artificial amplification provided by the device to

the specific needs and deficits of the user. This is difficult to do with

simple, passive devices such as ear trumpets. In cases of conductive hearing

loss, all frequencies tend to be affected more or less equally, and simply

boosting all incoming sounds can be helpful. But in the many patients with

sensorineural hearing loss due to outer hair cell

damage, different frequency ranges tend to be affected to varying extents. It

is often the case that sensorineural hearing loss

affects mostly high frequencies. If the patient is supplied with a device that

amplifies all frequencies indiscriminately, then such a device would most

likely overstimulate the patient’s still sensitive

low-frequency hearing before it amplifies the higher frequencies enough to

bring any benefit. The effect of such a device would be to turn barely

comprehensible speech into unpleasantly loud booming noises. It would not make

speech clearer.

You may also remember from section

2.3 that the amplification provided by the outer hair cells is highly nonlinear

and “compressive,” that is, the healthy cochlea amplifies very quiet sounds

much more than moderately loud ones, which gives the healthy ear a very wide

“dynamic range,” allowing it to process sounds over an enormous amplitude range.

If this nonlinear biological amplification is replaced by an artificial device

that provides simple linear amplification, users often find environmental

sounds transition rather rapidly from barely audible to uncomfortably loud.

Adjusting such devices to provide a comfortable level of loudness can be a

constant struggle. To address this, modern electronic hearing aids designed for

patients with sensorineural hearing loss offer nonlinear

compressive amplification and “dynamic gain control.”
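To make the idea of compressive amplification concrete, here is a minimal sketch of the static input/output rule of a single-channel compressor. The function names and all parameter values (30 dB of gain below a 45 dB SPL "knee," a 3:1 compression ratio above it) are hypothetical illustrations, not settings of any real device. Real hearing aids apply such rules separately in several frequency channels and add attack and release time constants, which this sketch ignores.

```python
def compressor_gain_db(input_db, gain_db=30.0, knee_db=45.0, ratio=3.0):
    """Gain in dB applied to a sound whose input level is `input_db` (dB SPL).

    Hypothetical parameters: below `knee_db` the device is linear and adds
    `gain_db`; above it, each `ratio` dB of input increase yields only 1 dB of
    output increase, so the gain shrinks as sounds get louder.
    """
    if input_db <= knee_db:
        return gain_db
    excess_db = input_db - knee_db
    return gain_db - excess_db * (1.0 - 1.0 / ratio)


def output_level_db(input_db, **params):
    """Output level = input level + level-dependent gain."""
    return input_db + compressor_gain_db(input_db, **params)


if __name__ == "__main__":
    for level in (30, 45, 60, 75, 90):
        print(f"in {level:>3} dB SPL -> out {output_level_db(level):5.1f} dB SPL")
```

With these made-up settings, inputs spanning 60 dB (30 to 90 dB SPL) come out spanning only about 30 dB (60 to 90 dB SPL). Quiet sounds receive the full 30 dB boost, while sounds near 90 dB SPL receive essentially none.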

In recent years, hearing aid

technology has become impressively sophisticated, and may incorporate highly

directional microphones, as well as digital signal processing algorithms that transpose

frequency bands, allowing information in the high-frequency channels in which

the patient has a deficit to be presented to the still intact low-frequency

part of the cochlea. And for patients who cannot receive the suitably

amplified, filtered, and transposed sound through the ear canal, perhaps

because of chronic or recurrent infections, there are even bone-anchored

devices that deliver the sound as mechanical vibration of a titanium plate

embedded in the skull, as well as middle ear implants that vibrate the ossicles directly by means of a small transducer implanted in the middle ear.
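Frequency lowering can be thought of as a remapping of input frequencies onto output frequencies. The sketch below contrasts two simple rules: a linear transposition that shifts everything above a start frequency down by a fixed number of octaves, and nonlinear frequency compression, a related technique that squeezes the region above a cutoff into a narrower band. Both functions and all parameter values are hypothetical illustrations. Actual hearing aid algorithms differ between manufacturers and typically mix the lowered signal with the unprocessed low-frequency sound.

```python
def transpose(freq_hz, start_hz=4000.0, octaves=1.0):
    """Linear transposition: shift components at or above `start_hz`
    down by `octaves` octaves; leave lower components untouched."""
    if freq_hz < start_hz:
        return freq_hz
    return freq_hz / 2.0 ** octaves


def compress(freq_hz, cutoff_hz=2000.0, ratio=2.0):
    """Nonlinear frequency compression: above `cutoff_hz`, distances on a
    log-frequency (octave) scale are divided by `ratio`."""
    if freq_hz <= cutoff_hz:
        return freq_hz
    return cutoff_hz * (freq_hz / cutoff_hz) ** (1.0 / ratio)


if __name__ == "__main__":
    for f in (1000.0, 3000.0, 6000.0, 8000.0):
        print(f"{f:6.0f} Hz -> transposed {transpose(f):6.0f} Hz, "
              f"compressed {compress(f):6.0f} Hz")
```

With these settings, an 8 kHz component ends up at 4 kHz under either rule, but a 3 kHz component is left alone by transposition and lowered to roughly 2.45 kHz by compression.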

With such a wide variety of

technologies available, modern hearing aid devices can often bring great

benefit to patients, but only if they are carefully chosen and adjusted to fit

each patient’s particular needs. Otherwise they tend to end up in a drawer,

gathering dust. In fact, this still seems to be the depressingly common fate of

many badly fitted hearing aids. It has been estimated (Kulkarni

& Hartley, 2008) that, of the 2 million hearing aids owned by hearing

impaired individuals in the UK in 2008, as many as 750,000, more than one third, were not being used on a regular basis, presumably because they failed to meet their owners’ needs. Also, a further 4 million hearing impaired

individuals in the

To work at their best, hearing

aids should squeeze as much useful acoustic information as possible into the

reduced frequency and dynamic range that remains available to the patient. As

we shall see in the following sections, the challenges for cochlear implant

technology are similar, but tougher, as cochlear implants have so far been used

predominantly in patients with severe or profound hearing loss (thresholds

above 75 dB HL) over almost the entire frequency range, so very little normal

functional hearing is left to work with.

8.2 The Basic Layout of Cochlear Implants

Cochlear implants are

provided to the many patients who are severely deaf due to extensive damage to

their hair cells. Severe hearing loss caused by middle ear disease can often be

remedied surgically. Damaged tympanic membranes can be repaired with skin

grafts, and calcified ossicles can be trimmed or

replaced. But at present there is no method for regenerating or repairing

damaged or lost sensory hair cells in the mammalian ear. And when hair cells

are lost, the auditory nerve fibers that normally connect to them are

themselves at risk and may start to degenerate. It seems that auditory nerve

fibers need to be in contact with hair cells to stay in the best of health. But

while this anterograde degeneration of denervated auditory nerve fibers is well documented, and

can even lead to cell death and shrinkage in the cochlear nuclei, it is a very

slow process and rarely leads to a complete degeneration of the auditory

afferents. Also, a patient’s hearing loss is often attributable to outer hair

cell damage. Without the amplification provided by these cells, the remaining

inner hair cells are incapable of providing sensitive hearing, but they

nevertheless survive and can exercise their beneficial trophic

influences on the many type I auditory nerve fibers that contact them.

Consequently, even after many years of profound deafness, most hearing impaired

patients still retain many thousands of auditory nerve fibers, waiting for auditory

input. Cochlear implants are, in essence, simple arrays of wires that stimulate

these nerve fibers directly with pulses of electrical current.

Of course, the electrical

stimulation of the auditory nerve needs to be as targeted and specific as one

can make it. Other cranial nerves, such as the facial nerve, run close to the

auditory branch of the vestibulocochlear nerve, and

it would be unfortunate if electrical stimulus pulses delivered down the

electrodes, rather than evoking auditory sensations, merely caused the

patient’s face to twitch. To achieve highly targeted stimulation with an

extracellular electrode, it is necessary to bring the electrode contacts into

close proximity to the targeted auditory nerve fibers. And to deliver lasting benefits to the patient, they need to stay there for many years. At present,

practically all cochlear implant devices in clinical use achieve this by

inserting the electrode contacts into the canals of the cochlea.

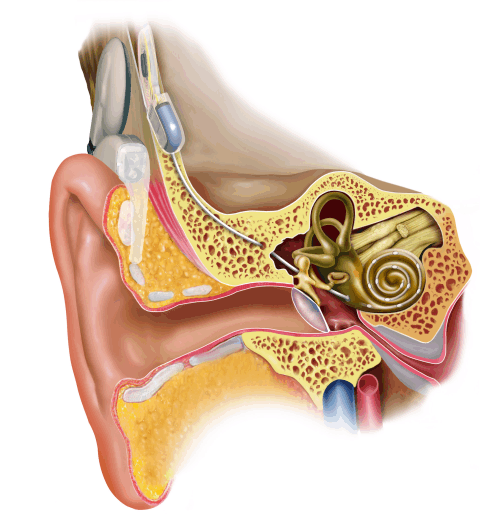

Figure 8.2

A modern cochlear implant. Image kindly provided by

Figure 8.2 shows the layout of a typical

modern cochlear implant. The intracochlear electrode

is made of a plastic sheath fitted with electrode contacts. It is threaded into

the cochlea, either through the round window or through a small hole drilled

into the bony shell of the cochlea just next to the round window. The electrode

receives its electrical signals from a receiver device, which is implanted

under the scalp, on the surface of the skull, somewhat above and behind the

outer ear. This subcutaneous device in turn receives its signals and its

electrical energy via an induction coil from a headpiece radio transmitter.

Small, strong magnets fitted into the subcutaneous receiver and the transmitter

coil hold the latter in place on the patient’s scalp. The transmitter coil in

turn is connected via a short cable to a “speech processor,” which is usually

fitted behind the external ear. This speech processor collects sounds from the

environment through a microphone and encodes them into appropriate electrical

signals to send to the subcutaneous receiver. It also supplies the whole

circuitry with electrical power from a battery. We will have a lot more to say

about the signal processing that occurs in the speech processor in just a

moment, but first let us look in a bit more detail at how the intracochlear electrode is meant to interface with the

structures of the inner ear.

When implanting the device, the

surgeon threads the electrode through a small opening at or near the round

window, up along the scala tympani, so as to place

the electrode contacts as close as possible to the modiolus

(the center of the cochlear helix). Getting the electrode to “hug the modiolus” is thought to have two advantages: First, it

reduces the risk that the electrode might accidentally scratch the stria vascularis, which runs along the opposite, outer wall of the cochlear coil and could bleed easily, causing unnecessary trauma. Second, it is advantageous to position the electrode contacts very close to the auditory nerve (AN) fibers, whose cell bodies, the spiral ganglion

cells, live in a cavity inside the modiolus known as

Rosenthal’s canal.

Inserting an electrode into the

cochlea is a delicate business. For example, it is thought to be important to

avoid pushing the electrode tip through the basilar membrane and into the scala media or scala vestibuli, as that could damage AN

fiber axons running into the organ of Corti. It is also thought that electrode contacts that are pushed through into the scala media or scala vestibuli are much less efficient at stimulating AN fibers than those that sit on the modiolar

wall of the scala tympani. You may recall that the

normal human cochlear helix winds through two and a half turns. Threading an

electrode array from the round window through two and a half turns all the way

up to the cochlear apex is not possible at present. Electrode insertions that cover

the first, basal-most one to one and a quarter turns are usually as much as can

reasonably be achieved. Thus, even after a highly successful cochlear implant (CI) operation, the

electrode array will not cover the whole of the cochlea’s tonotopic

range, as there will be no contacts placed along much of the apical, low-frequency

end.

Through its electrode contacts,

the cochlear implant then aims to trigger patterns of activity in the auditory

nerve fibers, which resemble, as much as possible, the activity that would be

set up by the synaptic inputs from the hair cells if the organ of Corti on the basilar membrane were functional. What such normal

patterns of activity should look like we have discussed in some detail in

chapter 2. You may recall that, in addition to the tonotopic

place code for sound frequency and the spike rate coding for sound intensity, a

great deal of information about a sound’s temporal structure is conveyed

through phase-locked temporal discharge patterns of auditory nerve fibers. This

spike pattern information encodes the sound’s amplitude envelope, its

periodicity, and even the submillisecond timing of

features that we rely on to extract interaural time differences for spatial

hearing. Sadly, the intracochlear electrode array

cannot hope to reproduce the full richness of information that is encoded by a

healthy organ of Corti. There are quite serious

limitations on what is achievable with current technology, and many compromises

must be made. Let us first consider the place coding issue.

8.3 Place Coding with Cochlear Implants

If the electrodes can

only cover the first of the two and a half turns of the cochlea, then you might

expect that less than half of the normal tonotopic

range is covered by the electrode array. Indeed, if you look back at figure 2.3,

which illustrated the tonotopy of the normal human basilar membrane, you will

see that the first, basal-most turn of the human cochlea covers the

high-frequency end, from about 20 kHz down to about 1.2 kHz. The parts of

the basilar membrane that are most sensitive to

frequencies lower than that are beyond the reach of current cochlear implant

designs. This high-frequency bias could be problematic. You may recall that the

formants of human speech, which carry much of the phonetic information, all

evolve at relatively low frequencies, often as low as just a few hundred hertz.
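To see why a basal electrode array misses the low frequencies, one can use Greenwood's place-frequency function, a widely used empirical fit to the human cochlear map. The parameter values below (A = 165.4 Hz, a = 2.1, k = 0.88, with position expressed as a fraction of basilar membrane length measured from the apex) are the commonly quoted human values and are an assumption here, not numbers taken from this chapter. Treat the outputs as rough approximations.

```python
import math

# Commonly quoted human parameters for Greenwood's place-frequency function
# (an assumption here, not values given in this chapter).
A_HZ = 165.4
ALPHA = 2.1
K = 0.88


def place_to_frequency(x_from_apex):
    """Characteristic frequency (Hz) at relative position x (0 = apex, 1 = base)."""
    return A_HZ * (10.0 ** (ALPHA * x_from_apex) - K)


def frequency_to_place(freq_hz):
    """Inverse map: relative position (0 = apex, 1 = base) for a given frequency."""
    return math.log10(freq_hz / A_HZ + K) / ALPHA


if __name__ == "__main__":
    # How far from the base must an electrode reach to contact the 1.2 kHz place?
    depth = 1.0 - frequency_to_place(1200.0)
    print(f"base: {place_to_frequency(1.0):.0f} Hz, apex: {place_to_frequency(0.0):.0f} Hz")
    print(f"1.2 kHz place lies {depth:.0%} of the way from base to apex")
```

Under these assumptions, the 1.2 kHz place lies a little over halfway along the basilar membrane from the base, and frequencies of a few hundred hertz, where the lower formants live, map onto the apical third of the membrane.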

Poor coverage of the low-frequency

end is an important limitation for cochlear implants, but it is not as big a

problem as it may seem at first glance, for two reasons. First, Rosenthal’s

canal, the home of the cell bodies of the auditory nerve fibers, runs alongside

the basilar membrane for only about three quarters of its length, and does not

extend far into the basilar membrane’s most apical turn. The spiral ganglion

cells that connect to the low-frequency apical end of the basilar membrane

cover the last little stretch with axons that fan out over the last turn,

rather than positioning themselves next to the apical points on the basilar

membrane they innervate (Kawano, Seldon, & Clark,

1996). Consequently, there is a kind of anatomical compression of the tonotopy

of the spiral ganglion relative to that of the organ of Corti,

and an electrode array that runs along the basilar membrane for 40% of its

length from the basal end may nevertheless come into close contact with over

60% of spiral ganglion cells. Second, it seems that our auditory system can

learn to understand speech fairly well even if formant contours are shifted up

in frequency. In fact, implantees who had previous

experience of normal hearing often describe the voices they hear through the

implants as “squeaky” or “Mickey Mouse-like” compared to the voices they used

to experience, but they can nevertheless understand the speech well enough to

hold a telephone conversation.

Trying to get cochlear implants to

cover a wide range of the inner ear’s tonotopy is one issue. Another technical

challenge is getting individual electrode contacts to target auditory nerve

fibers so that small parts of the tonotopic array can

be activated selectively. It turns out that electrical stimuli delivered at one

point along the electrode array tend to spread sideways and may activate not

just one or a few, but many neighboring frequency channels. This limits the

frequency resolution that can be achieved with cochlear implants, and

constitutes another major bottleneck of this technology.

Obviously, the number of separate frequency

channels that a cochlear implant delivers can never be greater than the number

of independent electrode contacts that can be fitted on the implant. The first

ever cochlear implants fitted to human patients in the 1950s had just a single

channel (Djourno & Eyries,

1957), and were intended to aid lip reading and to provide basic sound awareness. They were certainly not good enough to allow the implantees to understand speech. At the time of writing,

the number of channels in implants varies with the manufacturer, but is usually

fewer than twenty-five. This is a very small number given that the device is intended to replace tonotopically organized input from

over 3,000 separate inner hair cells in the normal human cochlea. You might

therefore think that it would be desirable to increase this number further.

However, simply packing greater

and greater numbers of independent contacts onto the device is not enough. Bear

in mind that the electrode contacts effectively float in the perilymphatic fluid that fills the scala

tympani, and any voltage applied to the contacts will provoke current flows that

may spread out in all directions, and affect auditory nerve fibers further

afield almost as much as those in the immediate vicinity. There is little point

in developing electrodes with countless separate contacts if the “cross talk”

between these contacts is very high and the electrodes cannot deliver

independent information because each electrode stimulates very large, and

largely overlapping, populations of auditory nerve fibers. Somehow the

stimulating effect of each electrode contact must be kept local.

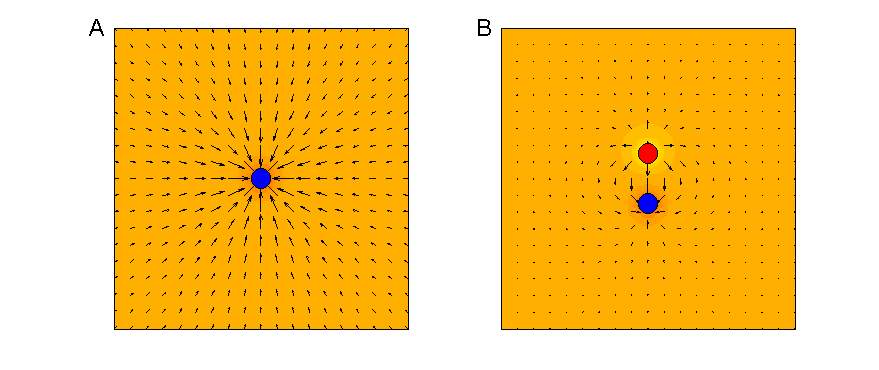

One strategy that aims to achieve

this is the use of so-called bipolar, or sometimes even tripolar,

rather than simple monopolar electrode

configurations. A bipolar configuration, rather than delivering voltage pulses

to each electrode contact independently, delivers voltage pulses of opposite polarity to pairs of neighboring electrode sites. Why would that be

advantageous? An electrode influences neurons in its vicinity by virtue of the

electric field it generates when a charge is applied to it (compare figure

8.3). The field will cause any charged particles in the

vicinity, such as sodium ions in the surrounding tissue, to feel forces of

electrostatic attraction toward or repulsion away from the electrode. These

forces will cause the charges to move, thus setting up electric currents, which

in turn may depolarize the membranes of neurons in the vicinity to the point

where these neurons fire action potentials. The density of the induced currents

will be proportional to the voltage applied, but will fall off with distance

from the electrode according to an inverse square law. Consequently, monopolar electrodes will excite nearby neurons more easily

than neurons that are further away, and while this decrease with distance is

initially dramatic, it does “level off” to an extent at greater distances. This

is illustrated in figure 8.3A.

The little arrows point in the direction of the electric force field, and their length is proportional to the logarithm of

the size of the electric forces available to drive currents at each point.

Figure 8.3

Electric fields

created by monopolar (A) and bipolar (B) electrodes.

In a bipolar electrode arrangement,

as illustrated in figure 8.3 B, equal and opposite

charges are present at a pair of electrode contacts in close vicinity. Each of

these electrodes produces its own electrostatic field, which conforms to the

inverse square law, and a nearby charged particle will be attracted to one and

repelled by the other electrode in the pair. However, seen from points a little

further afield, the two electrodes with their opposite charges may seem to lie

“more or less equally far away,” and in “more or less the same direction,” and

the attractive and repulsive forces exercised by the two electrodes will

therefore largely cancel each other at these more distant points. Over distances that are

large compared to the separation of the electrodes, the field generated by a

bipolar electrode therefore declines much faster than one generated by a monopolar electrode. (Note that the electric field arrows

around the edges of figure 8.3B

are shorter than those in A.) In theory, it should therefore be possible to

keep the action of cochlear implant electrodes more localized when the

electrode contacts are used in pairs to produce bipolar stimulation, rather

than driving each electrode individually.
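The difference between the two configurations can be checked numerically by treating each contact as an ideal point charge in a uniform medium. This is a strong simplification, since real contacts sit in a fluid-filled, anatomically complex volume conductor. In the sketch below, the function name and the contact spacing are illustrative assumptions; the code computes the field strength at increasing distances perpendicular to the contacts.

```python
import math

SPACING = 1.0  # hypothetical distance between the paired contacts (arbitrary units)


def field_strength(charges, point):
    """Magnitude of the electrostatic field at `point` = (x, y, z) produced by
    point charges given as ((x, y, z), q) pairs (Coulomb constant set to 1)."""
    total = [0.0, 0.0, 0.0]
    for position, q in charges:
        delta = [p - c for p, c in zip(point, position)]
        r = math.sqrt(sum(d * d for d in delta))
        for i in range(3):
            # Each charge contributes q / r^2 along the unit vector delta / r.
            total[i] += q * delta[i] / r**3
    return math.sqrt(sum(e * e for e in total))


monopolar = [((0.0, 0.0, 0.0), 1.0)]
bipolar = [((-SPACING / 2, 0.0, 0.0), 1.0), ((SPACING / 2, 0.0, 0.0), -1.0)]

if __name__ == "__main__":
    for distance in (1.0, 2.0, 4.0, 8.0, 16.0):
        point = (0.0, distance, 0.0)  # move away perpendicular to the contact pair
        mono = field_strength(monopolar, point)
        bi = field_strength(bipolar, point)
        print(f"d={distance:5.1f}  monopolar={mono:.5f}  "
              f"bipolar={bi:.5f}  ratio={bi / mono:.3f}")
```

Doubling the distance divides the monopolar field by 4 (the inverse square law). Once the distance is large relative to the contact spacing, it divides the bipolar field by nearly 8, because the contributions of the two opposite charges increasingly cancel.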

We say “in theory,” because

experimental evidence suggests that, in practice, the advantage bipolar

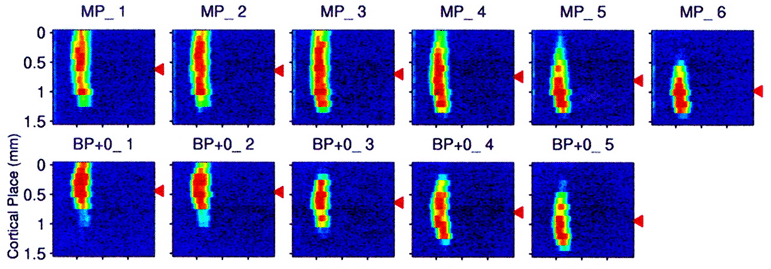

electrode arrangements offer tends to be modest. For example, Bierer and Middlebrooks (2002)

examined the activation of cortical neurons achieved in a guinea pig that had

received a scaled-down version of a human cochlear implant. The implant had six

distinct stimulating electrode sites, and brief current pulses were delivered,

either in a monopolar configuration, each site being

activated in isolation, or in a bipolar mode, with pairs of adjacent electrodes

being driven in opposite polarities. Cortical responses to the electrical

stimuli were recorded through an array of sixteen recording electrodes placed

along a 1.5-mm-long stretch of the guinea pig cortex, a stretch that covers the

representation of about two to three octaves of the tonotopic

axis in this cortical field. Figure

8.4 shows a representative example of the data they

obtained.

Figure 8.4

Activation of guinea pig auditory cortex in response to stimulation at five different sites on a cochlear implant with either monopolar (MP) or bipolar (BP) electrode configuration. Adapted from figure 4 of Bierer and Middlebrooks (2002) J Neurophysiol 87:478-492, with permission from The American Physiological Society.

The gray scale shows the normalized spike rate at each cortical location (y-axis) as a function of time after stimulus onset (x-axis). Cortical neurons responded to

the electrical stimulation of the cochlea with a brief burst of nerve impulses.

And while any one stimulating electrode caused a response over a relatively

wide stretch of cortical tissue, the “center of gravity” of the neural activity

pattern (shown by the black arrowheads) nevertheless shifts systematically as a function of the site of cochlear stimulation. The activation achieved in

bipolar mode is somewhat more focal than that seen

with monopolar stimulation, but the differences are

not dramatic.
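The “center of gravity” of a cortical activity profile is simply a response-weighted mean of recording positions. Below is a minimal sketch that assumes sixteen recording sites spaced evenly along 1.5 mm, as in the experiment described above. The function name is hypothetical, and the spike-rate profile is made up for illustration; it is not data from Bierer and Middlebrooks (2002).

```python
def activity_centroid(rates, positions_mm):
    """Response-weighted mean position (mm) of an activity profile."""
    total = sum(rates)
    if total == 0:
        raise ValueError("no activity to locate")
    return sum(r * p for r, p in zip(rates, positions_mm)) / total


# Sixteen recording sites spread evenly over 1.5 mm of cortex (0.1 mm spacing).
positions = [i * 1.5 / 15 for i in range(16)]

# Hypothetical normalized spike rates: a broad response peaking at site 6.
rates = [0.0, 0.1, 0.3, 0.6, 0.8, 0.9, 1.0, 0.9, 0.8, 0.6, 0.3, 0.1, 0.0, 0.0, 0.0, 0.0]

if __name__ == "__main__":
    print(f"centroid at {activity_centroid(rates, positions):.2f} mm")
```

Because the centroid averages over the whole profile, it can shift in a graded, systematic way with the site of stimulation even when each individual response is broad.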

Experiments testing the level of

speech comprehension that can be achieved by implanted patients also fail to

show substantial and consistent advantages of bipolar stimulation (

Of course, for electrodes inserted

into the scala tympani, the partition wall between

the scala tympani and Rosenthal’s canal sets absolute

limits on how close the contacts can get to the neurons they are meant to

stimulate, and this in turn limits the number of distinct channels of acoustic

information that can be delivered through a cochlear implant. If electrodes

could be implanted in the modiolus or the auditory

nerve trunk to contact the auditory nerve fibers directly, this might increase

the number of well-separated channels that could be achieved, and possible

designs are being tested in animal experiments (Middlebrooks

& Snyder, 2007). But the surgery involved in implanting these devices is

somewhat riskier, and whether such devices would work well continuously for

decades is at present uncertain. Also, in electrode arrays that target the

auditory nerve directly, working out the tonotopic

order of the implanted electrodes is less straightforward.

With any stimulating electrode,

larger voltages will cause stronger currents, and the radius over which neurons

are excited by the electrode will grow accordingly. The duration of a current

pulse also plays a role, as small currents may be sufficient to depolarize a

neuron’s cell membrane to threshold provided that they are applied for long

enough. To keep the effect of a cochlear implant electrode localized to a small

set of spiral ganglion cells, one would therefore keep the stimulating currents

weak and brief, but that is not always possible because cochlear implants

signal changes in sound level by increasing or decreasing stimulus current, and

implantees perceive larger currents (or longer

current pulses) as “louder” (McKay, 2004; Wilson, 2004). In fact, quite modest

increases in stimulus current typically evoke substantially louder sensations.
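The trade-off between current amplitude and pulse duration is often summarized by the classic hyperbolic strength-duration relation (usually associated with Weiss and Lapicque). In this relation, the threshold current equals a minimum current, the rheobase, multiplied by 1 + chronaxie/duration. The sketch below uses that textbook form with made-up rheobase and chronaxie values. It is an idealization, not a description of any particular implant or auditory nerve fiber.

```python
RHEOBASE_UA = 100.0   # hypothetical minimum effective current (microamps)
CHRONAXIE_US = 100.0  # hypothetical chronaxie (microseconds)


def threshold_current_ua(duration_us, rheobase_ua=RHEOBASE_UA, chronaxie_us=CHRONAXIE_US):
    """Hyperbolic strength-duration curve: I_th = I_rh * (1 + chronaxie / duration)."""
    if duration_us <= 0:
        raise ValueError("pulse duration must be positive")
    return rheobase_ua * (1.0 + chronaxie_us / duration_us)


def threshold_charge_nc(duration_us, **params):
    """Charge per pulse at threshold in nanocoulombs (1 uA x 1 us = 1 pC = 1e-3 nC)."""
    return threshold_current_ua(duration_us, **params) * duration_us * 1e-3


if __name__ == "__main__":
    for duration in (25.0, 50.0, 100.0, 200.0, 400.0):
        print(f"{duration:5.0f} us: {threshold_current_ua(duration):5.0f} uA, "
              f"{threshold_charge_nc(duration):4.1f} nC per pulse")
```

Under these assumptions, halving the pulse duration from 100 to 50 µs raises the threshold current from 200 to 300 µA. This is why the goals of keeping currents both weak and brief pull against each other.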

In normal hearing, barely audible

sounds would typically be approximately 90 dB weaker than sounds that might be

considered uncomfortably loud. In cochlear implants, in contrast, electrical

stimuli grow from barely audible to uncomfortably loud if the current amplitude

increases by only about 10 dB or so (McKay, 2004; Niparko,

2004). One of the main factors contributing to these differences between

natural and electrical hearing is, of course, that the dynamic range

compression achieved by the nonlinear amplification of sounds through the outer

hair cells in normal hearing, which we discussed in section 2.3, is absent in

direct electrical stimulation. Cochlear implants, just like many digital

hearing aids, must therefore map sound amplitude onto stimulus amplitude in a

highly nonlinear fashion.
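One simple way to picture such a mapping is to assign acoustic levels within an input window, linearly in decibels, to currents between a patient's threshold (T) and comfort (C) levels, the electrical limits measured when an implant is fitted. The function below is a hypothetical sketch. The 60 dB input window and the T and C currents (100 and 316 µA, that is, a range of about 10 dB) are illustrative assumptions, and real processors use their own, often manufacturer-specific, compression functions.

```python
import math


def acoustic_to_current_ua(level_db, in_floor_db=30.0, in_ceiling_db=90.0,
                           t_level_ua=100.0, c_level_ua=316.0):
    """Map an acoustic level (dB SPL) onto a stimulus current (microamps).

    Levels are clipped to the input window; within it, equal steps in dB of
    sound map onto equal steps in dB of current, so a 60 dB acoustic range is
    squeezed into the ~10 dB range between T and C levels (all values hypothetical).
    """
    clipped = min(max(level_db, in_floor_db), in_ceiling_db)
    fraction = (clipped - in_floor_db) / (in_ceiling_db - in_floor_db)
    t_db = 20.0 * math.log10(t_level_ua)
    c_db = 20.0 * math.log10(c_level_ua)
    return 10.0 ** ((t_db + fraction * (c_db - t_db)) / 20.0)


if __name__ == "__main__":
    for level in (20.0, 30.0, 60.0, 90.0, 100.0):
        print(f"{level:5.1f} dB SPL -> {acoustic_to_current_ua(level):6.1f} uA")
```

Every 6 dB step in sound level here changes the current by only about 1 dB, a compression ratio of roughly 6:1.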

Stronger stimulating currents,

which signal louder sounds, will, of course, not just drive nearby nerve fibers

more strongly, but also start to recruit nerve fibers increasingly further

afield. In other words, louder sounds may mean poorer channel separation. To an

extent this is a natural state of affairs, since, as we have seen in section

2.4, louder sounds also produce suprathreshold

activation over larger stretches of the tonotopic

array in natural hearing. However, we have also seen that, in natural hearing,

temporal information provided through phase locking may help disambiguate place-coded

frequency information at high sound levels (compare, for example, sections 2.4

and 4.3). For example, phase-locked activity with an underlying 500-Hz

rhythm in a nerve fiber with a characteristic frequency of 800 Hz would be

indicative of a loud 500-Hz tone. As we discuss further below, contemporary

cochlear implants are sadly not capable of setting up similar temporal fine

structure codes.

A lateral spread of activation

with higher stimulus intensities could become particularly problematic if

several electrode channels are active simultaneously. As we have just seen in

discussing bipolar electrodes, cancellation of fields from nearby electrode

channels can be advantageous (even if the benefits are in practice apparently not

very large). Conversely, it has been argued that “vector addition” of fields

generated by neighboring electrodes with the same polarity could be deleterious

(McKay, 2004; Wilson & Dorman, 2009). Consequently, a number of currently

available speech processors for cochlear implants are set up to avoid such

potentially problematic channel interaction by never firing more than one

electrode at a time. You may wonder how that can be done; after all, many

sounds we hear are characterized by their distribution of acoustic energy across

several frequency bands. So how is it possible to convey fairly complex

acoustic spectra, such as the multiple formant peaks of a vowel, without ever firing

more than one channel at a time? Clearly, this involves some trickery and some

compromises, as we shall see in the next sections, where we consider the

encoding of speech, pitch, and sound source location through cochlear implants.
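The basic idea behind firing only one electrode at a time is interleaving. Each channel gets its own brief time slot within a repeating stimulation frame, so that pulses on different electrodes never overlap. The scheduler below is a hypothetical sketch of that idea; its name, the per-channel rate, and the pulse duration are illustrative assumptions, and how real processors order channels and set pulse parameters varies between devices.

```python
def interleaved_schedule(n_channels, rate_per_channel_hz, pulse_width_us, n_frames=1):
    """Return (start_time_us, channel) pairs for non-overlapping pulses.

    Each frame lasts 1 / rate_per_channel_hz seconds and is divided into equal
    slots, one per channel, so only one electrode is active at any moment.
    `pulse_width_us` is the total duration of one (e.g., biphasic) pulse.
    """
    frame_us = 1e6 / rate_per_channel_hz
    slot_us = frame_us / n_channels
    if pulse_width_us > slot_us:
        raise ValueError("pulses too long to interleave at this rate")
    schedule = []
    for frame in range(n_frames):
        for channel in range(n_channels):
            start = frame * frame_us + channel * slot_us
            schedule.append((start, channel))
    return schedule


if __name__ == "__main__":
    for start, channel in interleaved_schedule(4, 1000.0, 50.0, n_frames=2):
        print(f"t={start:7.1f} us  electrode {channel}")
```

The price of strict interleaving is that channels share the total pulse budget. With 8 channels at 1000 pulses per second each, the device must deliver 8000 pulses per second, so each pulse must fit within a 125 µs slot.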

8.4 Speech Processing Strategies Used in Cochlear Implants

You will recall from

chapter 4 that, at least for English and most other Indo-European languages,

semantic meaning in speech is carried mostly by the time-varying pattern of

formant transitions, which are manifest as temporal modulations of between 1

and 7 Hz and spectral modulations of less than 4 cycles/kHz.

Consequently, to make speech comprehensible, neither the spectral nor the temporal

resolutions need to be very high. Given the technical difficulties involved in

delivering a large number of well-separated spectral channels through a

cochlear implant, this is a distinct advantage. In fact, a paper by Bob Shannon

and colleagues (1995) described a nice demonstration that as few as four

suitably chosen frequency channels can be sufficient to achieve good speech

comprehension. This was done by using a signal-processing technique known as

“noise vocoding,” which bears some similarity to the

manner in which speech signals are processed for cochlear implants. Thus, when

clinicians or scientists wish to give normally hearing individuals an idea of

what the world would sound like through a cochlear implant, they usually use

noise vocoded speech for these demonstrations. You

can find examples of noise vocoded sounds on the Web

site that accompanies this book. Further work by Dorman, Loizou, and Rainey (1997) has extended

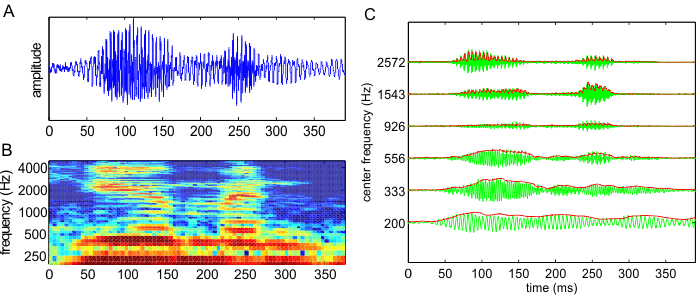

The first step in noise vocoding, as well as in speech processing for cochlear

implants, is to pass the recorded sound through a series of bandpass

filters. This process is fundamentally similar to the gamma-tone filtering we

described in sections 1.5 and 2.4 as a method for modeling the function of the

basilar membrane, except that speech processors and noise vocoders

tend to use fewer, more broadly tuned, nonoverlapping

filters. Figure 8.5C

illustrates the output of such a filter bank, comprising six bandpass filters, in response to the acoustic waveform shown

in figure 8.5A

and B.

Figure 8.5

(A) Waveform of the

word “human” spoken by a native speaker of American English. (B)

Spectrogram of the same word. (C) Gray lines: Output of a set of six bandpass filters in response to the same word. The filter

spacing and bandwidth in this example are two-thirds of an octave. The center

frequencies are shown on the y-axis. Black lines: amplitude envelopes of the

filter outputs, as estimated with half-wave rectification and low-pass filtering.
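
To make the filter-bank and envelope-extraction steps more concrete, here is a minimal noise-vocoder sketch in Python. It is an illustration under stated assumptions, not the processing used in any particular study or device. It uses SciPy Butterworth band-pass filters instead of gamma-tone filters, and six bands that are spaced and sized two-thirds of an octave apart, as in figure 8.5. The lowest center frequency (250 Hz), the 50-Hz envelope cutoff, the filter orders, and the function names are our own hypothetical choices. Each envelope is obtained by half-wave rectification followed by low-pass filtering. The vocoder then multiplies each envelope with noise confined to the same band and sums the bands.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def band_edges(f_low=250.0, n_bands=6, octave_step=2 / 3):
    """Center frequencies spaced `octave_step` octaves apart; each band is `octave_step` octaves wide."""
    centers = f_low * 2.0 ** (octave_step * np.arange(n_bands))
    half_width = 2.0 ** (octave_step / 2)
    return centers, centers / half_width, centers * half_width


def extract_envelopes(x, fs, f_low=250.0, n_bands=6, env_cutoff=50.0):
    """Band-pass filter bank, then half-wave rectification and low-pass smoothing in each band."""
    centers, lo, hi = band_edges(f_low, n_bands)
    smoother = butter(2, env_cutoff, btype="lowpass", fs=fs, output="sos")
    filters = [butter(3, [f1, f2], btype="bandpass", fs=fs, output="sos")
               for f1, f2 in zip(lo, hi)]
    envs = np.array([sosfilt(smoother, np.maximum(sosfilt(sos, x), 0.0)) for sos in filters])
    return centers, filters, envs


def noise_vocode(x, fs, seed=0, **kwargs):
    """Replace each band's fine structure with band-limited noise carrying that band's envelope."""
    rng = np.random.default_rng(seed)
    _, filters, envs = extract_envelopes(x, fs, **kwargs)
    out = np.zeros(len(x))
    for sos, env in zip(filters, envs):
        carrier = sosfilt(sos, rng.standard_normal(len(x)))  # noise confined to the same band
        out += np.maximum(env, 0.0) * carrier  # clip small negative ripples left by the smoother
    return out * np.sqrt(np.mean(x ** 2) / np.mean(out ** 2))  # match the input RMS level


fs = 16000
t = np.arange(int(0.5 * fs)) / fs
tone = np.sin(2 * np.pi * 1000 * t)
centers, _, envs = extract_envelopes(tone, fs)
print(np.argmax(envs.mean(axis=1)))  # 3: the band centered on 1 kHz
vocoded = noise_vocode(tone, fs)
```

With a pure 1-kHz tone as input, the largest envelope appears in the fourth band, whose center frequency is 1 kHz. Swapping the noise carriers for sinusoids would turn this into a tone vocoder, another widely used variant.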

Bandpass filtering similar to that shown

in figure 8.5 is the first step in the

signal processing for all cochlear implant speech processors, but processors

differ in what they then do with the output of the filters. One of the simplest

processing strategies, referred to as the simultaneous

analog signal (SAS) strategy, uses the filter outputs more or less directly

as the signal that is fed to the stimulating electrodes. The filter outputs

(shown by the gray lines in figure 8.5C)

are merely scaled to convert them into alternating currents of an amplitude

range that is appropriate for the particular electrode site they are sent to.

The appropriate amplitude range is usually determined when the devices are

fitted, simply by delivering a range of amplitudes to each site and asking the

patient to indicate when the signal becomes uncomfortably loud. A close cousin

of the SAS strategy, known as compressive

analog (CA), differs from SAS only in the details of the amplitude scaling

step.

Given that speech comprehension

usually requires only modest levels of spectral resolution, SAS and CA speech

processing can support good speech comprehension even though these strategies

make no attempt to counteract potentially problematic channel interactions. But

speech comprehension with SAS declines dramatically if there is much background

noise, particularly if the noise is generated by other people speaking in the

background, and it was thought that more refined strategies that might achieve

a better separation of a larger number of channels might be beneficial. The

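first strategy to work toward this goal is known as continuous interleaved sampling (CIS). A CIS device sends a continuous train

of pulses to each of the electrode channels, and the amplitude of each channel’s pulse train is modulated by the amplitude envelope of the corresponding filter output (figure 8.6).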

Note, by the way, that the pulses

used in CIS, and indeed in all pulsatile stimulation

for cochlear implants, are “biphasic,” meaning that each current pulse is

always followed by a pulse of opposite polarity, so that, averaged over time,

the net charge flowing out of the electrodes is zero. This is done because,

over the long term, net currents flowing from or into the electrodes can have

undesirable electrochemical consequences, and can provoke corrosion of the

electrode contacts or toxic reactions in the tissue.

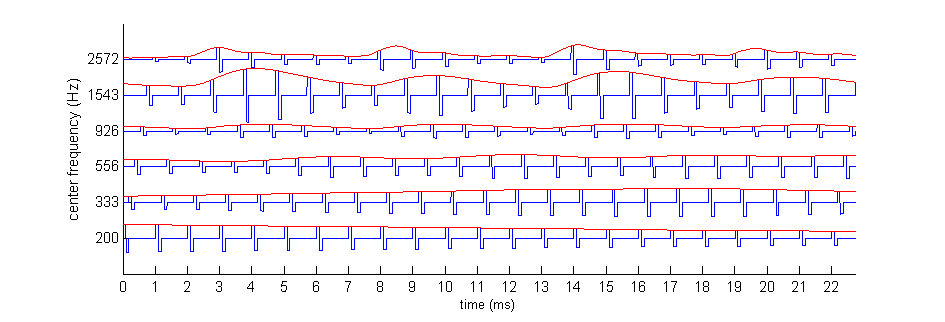

Figure 8.6

The

continuous interleaved sampling (CIS) speech coding strategy. Amplitude envelopes from the

output of a bank of bandpass filters (black dashed

lines; compare figure 8.5C) are used to modulate the

amplitude of regular biphasic pulse trains (shown in gray). The pulses for

different frequency bands are offset in time so that no two pulses are on at

the same time. The modulated pulses are then delivered to the cochlear implant

electrodes.
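
The interleaving idea behind CIS can be sketched in a few lines of Python. This is a minimal illustration, not any manufacturer’s implementation. It assumes 1,000 pulses per second on every channel, six channels, 25-µs phases, a negative-first phase order, and that each pulse takes its amplitude from the channel’s envelope at the pulse onset. All of these values and the function names are hypothetical. The sketch shows two properties described in the text: the pulses on different channels never coincide, and each biphasic pulse carries no net charge.

```python
import numpy as np


def biphasic_pulse(amplitude, phase_dur, fs):
    """One charge-balanced pulse: a negative phase followed by an equal positive phase.
    (Which polarity comes first is an assumption here; devices differ.)"""
    n = int(round(phase_dur * fs))
    return np.concatenate([np.full(n, -amplitude), np.full(n, amplitude)])


def cis_schedule(envelopes, fs_env, pulse_rate=1000.0):
    """Interleaved pulse onset times and amplitudes for each channel.

    envelopes: array (n_channels, n_samples) of amplitude envelopes sampled at fs_env Hz.
    Every channel pulses at the same fixed rate; channel k is delayed by
    k / (n_channels * pulse_rate) seconds so that pulses never coincide.
    """
    n_ch, n_samp = envelopes.shape
    duration = n_samp / fs_env
    period = 1.0 / pulse_rate
    slot = period / n_ch
    schedule = []
    for k in range(n_ch):
        onsets = np.arange(k * slot, duration, period)
        idx = np.minimum((onsets * fs_env).astype(int), n_samp - 1)
        schedule.append((onsets, envelopes[k, idx]))  # envelope sampled at each pulse onset
    return schedule


fs_env = 16000
envs = np.abs(np.random.default_rng(0).standard_normal((6, 800)))  # stand-in envelopes, 50 ms
sched = cis_schedule(envs, fs_env)
all_onsets = np.sort(np.concatenate([onsets for onsets, _ in sched]))
print(np.diff(all_onsets).min())  # about 1.67e-4 s, i.e., 1 ms shared among 6 channels
print(biphasic_pulse(1.0, 25e-6, 1e6).sum())  # 0.0: no net charge
```

Because each pulse lasts 50 µs in total while consecutive channels are about 167 µs apart, no two pulses overlap. Raising the pulse rate or the number of channels shrinks that gap, which is one reason why pulse rate, channel count, and pulse width trade off against each other in real devices.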

Because in CIS, no two electrode

channels are ever on simultaneously, there is no possibility of unwanted

interactions of fields from neighboring channels. The basic CIS strategy

delivers pulse trains, like those shown in figure 8.6,

to each of the electrode contacts in the implant. A number of implants now have

more than twenty channels, and the number of available channels is bound to

increase as technology develops. One reaction to the availability of an

increasing number of channels has been the emergence of numerous variants of

the CIS strategy which, curiously, deliberately choose not to use all the

available electrode channels. These strategies, with names like n-of-m, ACE (“advanced

combinatorial encoder”), and SPEAK (“spectral peak”),

use various forms of “dynamic peak picking” algorithms. Effectively, after

amplitude extraction, the device uses only the amplitude envelopes of a modest

number of frequency bands that happen to be associated with the largest

amplitudes, and switches the low-amplitude channels off. The rationale is to

increase the contrast between the peaks and troughs of the spectral envelopes

of the sound, which could help create, for example, a particularly salient

representation of the formants of a speech signal.
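
The core of such peak picking is simple to sketch. The Python below is a generic “keep the n largest of m channels per frame” selection, written for illustration only. The actual frame rates, values of n and m, and tie-breaking rules used by ACE, SPEAK, or other commercial strategies are not given here and may differ. The function names are hypothetical.

```python
import numpy as np


def n_of_m(frame, n):
    """Keep the n largest of the m channel amplitudes in one frame and zero the rest."""
    frame = np.asarray(frame, dtype=float)
    keep = np.argsort(frame)[-n:]  # indices of the n largest values
    out = np.zeros_like(frame)
    out[keep] = frame[keep]
    return out


def n_of_m_frames(envelopes, n):
    """Apply the selection separately to each time frame (columns of a channels x frames array)."""
    return np.apply_along_axis(n_of_m, 0, envelopes, n)


frame = [0.1, 0.8, 0.3, 0.05, 0.6, 0.2, 0.7, 0.1]  # m = 8 channels
print(n_of_m(frame, 3))  # keeps the 0.8, 0.7, and 0.6 channels; all others become 0
```

Switching off the weaker channels deepens the troughs between the surviving spectral peaks, which is exactly the contrast enhancement these strategies aim for.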

While all of these variants of CIS

still use asynchronous, temporally interleaved pulses to reduce channel

interactions, there are also algorithms being developed that deliberately

synchronize current pulses out of adjacent electrodes in an attempt to create

“virtual channels” by “current steering” (Wilson & Dorman, 2009). In its

simplest form, the idea is that simultaneously activating two adjacent channels

might produce a peak of activity at a point between the two

electrodes. However, the potential to effectively “focus” electrical fields

onto points between the relatively modest number of contacts on a typical electrode

is, of course, limited, and such approaches have so far failed to produce

substantial improvements in speech recognition scores compared to older

techniques. For a more detailed description of the various speech-processing

algorithms in use today, the interested reader may turn to reviews by Wilson

and colleagues (2004; 2009), which also discuss the available data regarding

the relative effectiveness of these various algorithms.

One thing we can conclude from the

apparent proliferation of speech-processing algorithms in widespread clinical

use is that, at present, none of the available algorithms is clearly superior

to any of the others.

8.5 Pitch and Music Perception Through Cochlear Implants

As we have just seen,

the majority of cochlear implant speech-processing strategies in use today rely

on pulsatile stimulation, where pulses are delivered

at a relatively high rate (1 kHz or more) to each stimulating electrode. The

pulse rate is the same on each electrode, and is constant, independent of the

input sound. The rationale behind this choice of pulse train carriers is to

deliver sufficiently well-resolved spectral detail to allow speech recognition

through a modest array of electrode contacts, which suffer from high levels of

electrical cross-talk. But when you cast your mind back to our discussions of

phase locking in chapter 2, and recall from chapter 3 that phase locking

provides valuable temporal cues to the periodicity, and hence the pitch, of a

complex sound, you may appreciate that the constant-rate current pulse carriers

used in many cochlear implant coding strategies are in some important respects

very unnatural. CIS or similar stimulation strategies provide the auditory

nerve fibers with very little information about the temporal fine structure of

the sound. Phase locking to the fixed-rate pulsatile

carrier itself would transmit no information about the stimulus at all. Some

fibers might conceivably be able to phase lock to some extent to the amplitude

envelopes in the various channels (although their spike timing would be rounded to the

nearest carrier pulse), but the amplitude envelopes used in CIS to modulate the

pulse trains are low-pass filtered at a few hundred hertz to avoid a phenomenon

called “aliasing.” Consequently, no temporal cues to the fundamental frequency

of a complex sound above about 300 Hz survive after the sound has been processed

with CIS or a similar strategy. And to infer the fundamental frequency from the

harmonic structure of the sound would, as you may recall, require a very fine

spectral resolution, which even a healthy cochlea may struggle to achieve, and

which is certainly beyond what cochlear implants can deliver at present or in

the foreseeable future. With these facts in mind, you will not be surprised to

learn that the ability of implantees to distinguish

the pitches of different sounds tends to be very poor indeed, with many implantees struggling to discriminate even pitch intervals

as large as half an octave or greater (Sucher & McDermott,

2007).
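
Why the envelopes must be low-pass filtered becomes clearer with a small numerical example. Each channel’s envelope is effectively sampled once per carrier pulse. By the sampling theorem, any modulation faster than half the pulse rate cannot be represented faithfully and instead reappears at a lower “alias” frequency. The sketch below assumes, purely for illustration, a pulse rate of 1,000 pulses per second and a 700-Hz modulation. The helper name is hypothetical.

```python
import numpy as np


def alias_frequency(f_mod, pulse_rate):
    """Apparent frequency (Hz) of a modulation f_mod when it is sampled at pulse_rate pulses/s."""
    return abs(f_mod - pulse_rate * round(f_mod / pulse_rate))


pulse_rate = 1000.0
t = np.arange(50) / pulse_rate  # times of 50 successive carrier pulses
print(alias_frequency(700, pulse_rate))  # 300.0
# At the pulse times, a 700-Hz and a 300-Hz modulation produce identical amplitudes:
print(np.allclose(np.cos(2 * np.pi * 700 * t), np.cos(2 * np.pi * 300 * t)))  # True
```

Because the pulse train cannot distinguish the two modulations, envelope components above half the pulse rate would inject spurious low-frequency fluctuations. Filtering them out beforehand avoids this, at the cost of discarding the fast periodicity cues discussed in the text.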

Against this background, you may

wonder to what extent it even makes sense to speak about pitch perception at

all in the context of electrical hearing with cochlear implants. This is a

question worth considering further, and in its historical context (McKay,

2004). As you may recall from chapter 3, the American National Standards

Institute (1994) defines pitch as “that auditory attribute of sound according

to which sounds can be ordered on a scale from low to high.” As soon as

cochlear implants with multiple electrode sites became available, researchers

started delivering stimulus pulses to either an apical or a basal site, asking

the implantees which electrical stimulus “sounded

higher.” In such experiments, many implantees would

reliably rank more basal stimulation sites as “higher sounding” than apical

sites. These reported percepts were therefore in line with the normal cochlear tonotopic order. However, if, instead of delivering

isolated pulses to various points along the cochlea, one stimulates just a

single point on the cochlea with regular pulse trains and varies the pulse

rates over a range from 50 to 300 Hz, implantees will

also report higher pulse rates as “higher sounding,” even if the place of

stimulation has not changed (McKay, 2004; Moore & Carlyon, 2005; Shannon,

1983).

If you find these observations

hard to reconcile, then you are in good company. Moving the site of stimulation

toward a more basal location is a very different manipulation from increasing

the stimulus pulse rate. How can they both have the same effect, and lead to a

higher sounding percept? One very interesting experiment by Tong and colleagues

(1983) suggests how this conundrum might be solved: Fast pulse rates and apical

stimulation sites may both sound “high,” but the “direction” labeled as “up” is

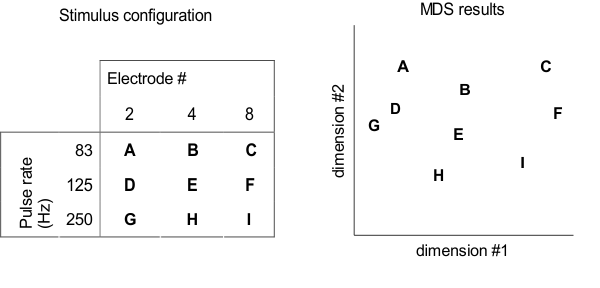

not the same in both cases. Tong et al. (1983) presented nine different

electrical pulse train stimuli to their implantee

subjects. The stimuli encompassed all combinations of three different pulse

rates and three different cochlear places, as shown in the left of figure

8.7. But instead of presenting the electrical stimuli in

pairs and asking “which one sounds higher,” the stimuli were presented in

triplets, and the subjects were asked “which two of the three stimuli sound

most alike.” By repeating this process many times with many different triplets

of sounds, it is possible to measure the “perceptual distance” or perceived

dissimilarity between any two of the sounds in the stimulus set. Tong and

colleagues then subjected these perceptual distance estimates to a multidimensional

scaling (MDS) analysis.

The details of MDS are somewhat

beyond the scope of this book, but let us try to give you a quick intuition of

the ideas behind it. Imagine you were asked to draw a map showing the locations

of three towns—A, B, and C—and you were told that the distance from A to C is

200 miles, while the distances from A to B and B to C are 100 miles each. In

that case, you could conclude that towns A, B, and C must lie on a single

straight line, with B between A and C. The map would therefore be “one-dimensional.”

But if the A-B and B-C distances turned out to be 150 miles each (in general,

if the sum of the A-B and B-C distances is larger than the A-C distance), then

you can conclude that A, B, and C cannot lie on a single (one-dimensional)

straight line, but rather must be arranged in a triangle in a (two-dimensional)

plane. Using considerations of this sort, MDS measures whether it is possible

to “embed” a given number of points into a space with only a small number of dimensions

without seriously distorting their pairwise distances (a distortion that MDS

quantifies with a measure called “stress”).
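
The town example can be checked numerically. The Python sketch below uses classical (Torgerson) metric MDS, a simple variant that may differ from the procedure Tong and colleagues actually used. It double-centers the squared distance matrix and counts how many eigenvalues are clearly positive. That count is the number of dimensions needed to reproduce the distances exactly. The function names and the tolerance are our own assumptions. With real, noisy perceptual data, no eigenvalue is exactly zero, which is why MDS software reports a misfit measure instead.

```python
import numpy as np


def classical_mds(dist, n_dims):
    """Classical MDS: coordinates whose pairwise distances approximate `dist`,
    plus all eigenvalues of the double-centered matrix (largest first)."""
    d = np.asarray(dist, dtype=float)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering  # inner products of the centered points
    evals, evecs = np.linalg.eigh(gram)  # returned in ascending order
    order = np.argsort(evals)[::-1]
    evals, evecs = evals[order], evecs[:, order]
    coords = evecs[:, :n_dims] * np.sqrt(np.maximum(evals[:n_dims], 0.0))
    return coords, evals


def dims_needed(dist, rel_tol=1e-9):
    """Number of eigenvalues clearly above zero, relative to the largest one."""
    _, evals = classical_mds(dist, 1)
    return int(np.sum(evals > rel_tol * evals[0]))


# Distances in miles between towns A, B, C (rows and columns in that order)
line = [[0, 100, 200], [100, 0, 100], [200, 100, 0]]
triangle = [[0, 150, 200], [150, 0, 150], [200, 150, 0]]
print(dims_needed(line), dims_needed(triangle))  # 1 2
```

For the 100/100/200-mile case, the one-dimensional coordinates come out as -100, 0, and 100 miles (up to a flip of direction), placing B exactly halfway between A and C.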

If cochlear place and pulse rate

both affected the same single perceptual variable, that is, “pitch,” then it

ought to be possible to arrange all the perceptual distances between the

stimuli used in the experiment by Tong et al. (1983) along a single, one-dimensional

“perceptual pitch axis.” However, the results of the MDS analysis showed this

not to be possible. Only a two-dimensional perceptual space (shown on the right

in figure 8.7) can accommodate the

observed pairwise perceptual distances between the

stimuli used by Tong and colleagues. The conclusion from this experiment is

clear: When asked to rank stimuli from “high” to “low,” implantees

might report both changes in the place of stimulation to more basal locations

and increases in the pulse rate as producing a “higher” sound, but there seem

to be two different directions in perceptual space along which a stimulus can

“become higher.”

Figure 8.7

Perceptual

multidimensional scaling (MDS) experiment by Tong and colleagues (1983). Cochlear implant users were asked

to rank the dissimilarity of nine different stimuli (A–I), which differed in

pulse rates and cochlear locations, as shown in the table on the left. MDS

analysis results of the perceptual dissimilarity (distance) ratings, shown on

the right, indicate that pulse rate and cochlear place change the implantee’s sound percept along two independent dimensions.

A number of authors writing on

cochlear implant research have taken to calling the perceptual dimension

associated with the locus of stimulation “place pitch,” and that which is

associated with the rate of stimulation “periodicity pitch.” Describing two

demonstrably independent (orthogonal) perceptual dimensions as two different

“varieties” of pitch seems to us an unfortunate choice. If we presented normal

listeners with a range of artificial vowels, kept the fundamental frequency

constant but shifted some of the formant frequencies upward to generate something

resembling a /u/ to /i/ transition, and then pressed

our listeners to tell us which of the two vowels sounded “higher,” most would

reply that the /i/, with its larger acoustic energy

content at higher formant frequencies, sounds “higher” than the /u/. If we then

asked the same listeners to compare a /u/ with a fundamental frequency of 440

Hz with another /u/ with a fundamental frequency of 263 Hz, the same listeners

would call the first one higher. But only in the second case, the “periodicity

pitch” case where the fundamental frequency changes from the note C4

to A4 while the formant spectrum remains constant, are we dealing

with “pitch” in the sense of the perceptual quality that we use to appreciate musical

melody. The “place pitch” phenomenon, which accompanies spectral envelope

changes rather than changes in fundamental frequency, is probably better

thought of as an aspect of timbre, rather than a type of pitch.
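
The musical interval in this example is easy to work out. The short Python sketch below assumes standard equal temperament with A4 = 440 Hz (MIDI note 69). The helper names are hypothetical. It confirms that 263 Hz is close to C4 and that the step from C4 to A4 spans roughly nine semitones. That is more than half an octave (six semitones), the interval size that many implantees struggle to discriminate.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def semitones(f1, f2):
    """Interval from f1 to f2 in equal-tempered semitones (12 per octave)."""
    return 12 * math.log2(f2 / f1)


def nearest_note(f, a4=440.0):
    """Name of the nearest equal-tempered note, taking A4 = a4 Hz as MIDI note 69."""
    midi = round(69 + semitones(a4, f))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


print(nearest_note(263), nearest_note(440))  # C4 A4
print(round(semitones(263, 440), 2))  # 8.91
```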

The use of the term “place pitch”

has nevertheless become quite widespread, and as long as this term is used

consistently and its meaning is clear, such usage, although in our opinion not

ideal, is nevertheless defensible. Perhaps more worrying is the fact that one

can still find articles in the cochlear implant literature that simply equate

the term “pitch” with place of cochlear stimulation, without any reference to

temporal coding or further qualification. Given the crucial role temporal

discharge patterns play in generating musical pitch, that

is simply wrong.

With the concepts of “place pitch”

and “periodicity pitch” now clear and fresh in our minds, let us return to the

question of why pitch perception is generally poor in cochlear implant

recipients, and what might be done to improve it. An inability to appreciate

musical melody is one of the most common complaints of implantees,

although their ability to appreciate musical rhythms is very good. (On the

book’s website you can find examples of noise vocoded

pieces of music, which may give you an impression of what music might sound

like through a cochlear implant). Furthermore, speakers of tonal languages,

such as Mandarin, find it harder to obtain good speech recognition results with

cochlear implants (Ciocca et al., 2002). However,

melody appreciation through electrical hearing is bound to stay poor unless the

technology evolves to deliver more detailed temporal fine structure information

about a sound’s periodicity. Indeed, a number of experimental speech processor

strategies are being developed and tested, which aim to boost temporal

information by increasing the signals’ depth of modulation, as well as

synchronizing stimulus pulses relative to the sound’s fundamental frequency

across all electrode channels (Vandali et al., 2005).

These do seem to produce a statistically significant but nevertheless modest

improvement over conventional speech processing algorithms.

What exactly needs to be done to

achieve good periodicity pitch coding in cochlear implants remains somewhat

uncertain. As we mentioned earlier, electrical stimulation of the cochlea with

regular pulse trains of increasing frequency from 50 to 300 Hz produces a

sensation of increasing pitch (McKay, 2004; Shannon, 1983), but unfortunately, increasing

the pulse rates beyond 500 Hz usually does not increase the perceived pitch

further (Moore & Carlyon, 2005). In contrast, as we saw in chapter 3, the

normal (“periodicity”) pitch range of healthy adults extends up to about 4 kHz.

Why is the limit of periodicity pitch that can be easily achieved with direct

electrical stimulation through cochlear implants so much lower than that

obtained with acoustic click-trains in the normal ear?

One possibility that was

considered, but discounted on the basis of psychoacoustical

evidence, is that the basal, and hence normally high-frequency, sites stimulated

by cochlear implants may simply not be as sensitive to temporal patterning in

the pitch range as their low-frequency, apical neighbors (Carlyon & Deeks, 2002). One likely alternative explanation is that

electrical stimulation may produce an excessive level of synchronization of

activity in the auditory nerve, which prevents the transmission of temporal

fine structure at high rates. Recall that, in the normal ear, each inner hair

cell connects to about ten auditory nerve fibers, and hair cells sit so closely

packed that several hundred auditory nerve fibers would all effectively serve

more or less the same “frequency channel.” This group of several hundred fibers

operates according to the “volley principle,” that is, while they phase lock to

the acoustic signal, an individual auditory nerve fiber need not respond to

every period of the sound. If it skips the odd period, the periods it misses will

very likely be marked by the firing of some other nerve fiber that forms part

of the assembly. Being able to skip periods is important, because physiological

limitations such as refractoriness mean that no nerve fiber can ever fire

faster than 1,000 Hz, and few are able to maintain firing rates greater than a

few hundred hertz for prolonged periods. Consequently, the temporal encoding of

the periodicity of sounds with fundamental frequencies greater than a few

hundred hertz relies on effective operation of the volley principle, so that

nerve fibers can “take it in turns” to mark the individual periods. In other

words, while we want the nerve fibers to lock to the periodicity of the sound,

we do not want them to be synchronized to each other.
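
A toy simulation shows why desynchronization matters for the volley principle. In the Python sketch below, every model fiber is phase locked: it can fire at most once per stimulus period, and only at a fixed phase. After each spike, it must sit out a refractory interval. We assume, for illustration only, a 1-kHz stimulus (so one period is 1 ms), a refractory interval of three periods (capping each fiber at about 333 spikes/s), a firing probability of 0.5 whenever a fiber is ready, and 20 fibers. All parameter values and function names are hypothetical. We compare independent fibers with a population in which every fiber copies the same spike train, that is, fibers that fire in lockstep.

```python
import numpy as np


def simulate_fibers(n_fibers, n_periods, p_fire=0.5, refractory_periods=3,
                    synchronized=False, seed=0):
    """Boolean spike array (fibers x stimulus periods) for phase-locked model fibers.

    A fiber may fire at most once per period and, after a spike, must wait
    `refractory_periods` periods before it can fire again (a stand-in for refractoriness).
    """
    rng = np.random.default_rng(seed)
    n_independent = 1 if synchronized else n_fibers
    spikes = np.zeros((n_independent, n_periods), dtype=bool)
    last = np.full(n_independent, -refractory_periods)
    for k in range(n_periods):
        ready = (k - last) >= refractory_periods
        fire = ready & (rng.random(n_independent) < p_fire)
        spikes[fire, k] = True
        last[fire] = k
    if synchronized:
        spikes = np.repeat(spikes, n_fibers, axis=0)  # every fiber copies the same train
    return spikes


def coverage(spikes):
    """Fraction of stimulus periods marked by at least one spike in the population."""
    return spikes.any(axis=0).mean()


volley = simulate_fibers(20, 1000)
locked = simulate_fibers(20, 1000, synchronized=True)
print(coverage(volley), coverage(locked))  # close to 1 versus at most about 1/3
```

No single fiber, in either population, fires on more than about a third of the periods. When the fibers take turns, however, nearly every period is marked by some spike. When they fire in lockstep, the population can mark no more periods than any one of its members.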

It is possible that the physiology

of the synapses that connect the nerve fibers to the inner hair cells may favor

such an asynchronous activation. In contrast, electrical current pulses from

extracellular electrodes that, relative to the spatial scale

of individual nerve fibers, are both very large and far away, can only

lead to a highly synchronized activation of the nerve fibers, and would make it

impossible for the auditory nerve to rely on the volley principle for the

encoding of high pulse rates. Recordings from the auditory nerve of implanted

animals certainly indicate highly precise time locking to every pulse in a

pulse-train, as well as an inability to lock to rates higher than a few hundred

hertz (Javel et al., 1987), and recordings in the

central nervous system in response to electrical stimulation of the cochlea

also provide indirect evidence for a hypersynchronization

of auditory nerve fibers (Hartmann & Kral, 2004).

If this hypersynchronization

is indeed the key factor limiting pitch perception through cochlear implants,

then technical solutions to this problem may be a long way off. There have been

attempts to induce a degree of “stochastic resonance” to desynchronize the

activity of the auditory nerve fibers by introducing small amounts of noise or

jitter into the electrode signals, but these have not yet produced significant

improvements in pitch perception through cochlear implants (Chen, Ishihara,

& Zeng, 2005). Perhaps the contacts of electrodes

designed for insertion into the scala tympani are

simply too few, too large, and too far away from the spiral ganglion to allow

the activation of auditory nerve fibers in a manner that favors the

stimulus-locked yet desynchronized activity necessary to transmit a lot of

temporal fine structure information at high rates. In that case, “proper” pitch

perception through cochlear implants may require a radical redesign of the

implanted electrodes, so that many hundreds, rather than just a few dozen,

distinct electrical channels can be delivered in a manner that allows very

small groups of auditory nerve fibers to be targeted individually and activated

independently of their neighbors.

You may have got the impression

that using cochlear implants to restore hearing, or, in the case of the

congenitally deaf, to introduce it for the first time, involves starting with a

blank canvas in which the patient has no auditory sensation. This is not always

the case, however, as some residual hearing, particularly at low frequencies (1

kHz or less), may still be present. Given the importance of those low frequencies

in pitch perception, modified cochlear electrode arrays are now being used that

focus on stimulating the basal, dead high-frequency region of the cochlea while

leaving hearing in the intact low-frequency region to be boosted by

conventional hearing aids.

8.6 Spatial Hearing with Cochlear Implants

Until recently,

cochlear implantation was reserved solely for patients with severe hearing loss

in both ears, and these patients would typically receive an implant in one ear

only. The decision to implant only one ear was motivated partly by

considerations of added cost and surgical risk, but also from doubts about the

added benefit a second device might bring, and the consideration that, at a

time when implant technology was developing rapidly, it might be worth

“reserving” the second ear for later implantation with a more advanced device.

While unilateral implantation remains the norm at the time of this writing,

attitudes are changing rapidly in favor of bilateral implantation. Indeed, in

2008, the British National Institute for Health and Clinical Excellence (NICE)

changed its guidelines, and now recommends that profoundly deaf patients should

routinely be considered for bilateral implantation.

You may recall from chapter 5 that

binaural cues play a key role in our ability to localize sounds in space and to

pick out sounds of interest among other competing sounds. Any spatial hearing

that humans are capable of with just one ear stems from a combination of

head-shadow effects and their ability to exploit rather subtle changes at the

high end of the spectrum, where direction-dependent filtering by the external

ear may create spectral-shape localization cues. Contrast this with the

situation for most cochlear implant patients who will receive monaural

stimulation via a microphone located above and behind the ear, which, for the

reasons discussed earlier, conveys very limited spectral detail because

typically not much more than half a dozen or so effectively separated frequency

channels are available at any time. It is therefore unsurprising that, with

just a single implant, patients are effectively unable to localize sound

sources or to understand speech in noisy environments. Communicating in a busy

restaurant or bar is therefore particularly difficult for individuals with

unilateral cochlear implants.

Their quality of life can, however,

sometimes be improved considerably by providing cochlear implants in both

ears, and patients with bilateral cochlear implants show substantially improved

sound localization compared to their performance with either implant alone (Litovsky et al., 2006; van Hoesel

& Tyler, 2003). Sensitivity to ILDs can be as

good as that seen in listeners with normal hearing, although ITD thresholds

tend to be much worse, most likely because of the lack of temporal fine

structure information provided by the implants (van Hoesel

& Tyler, 2003). Speech-in-noise perception can also improve following

bilateral cochlear implantation. In principle, such benefits may accrue solely from

“better ear” effects: The ear on the far side of a noise source will be in a

sound shadow produced by the head, which can improve the signal-to-noise ratio if

the sound of interest originates from a different direction

than the distracting noise. A listener with two ears may be able to benefit

from the better ear effect simply by attending to the more favorably positioned

ear (hence the name), but a listener with only one functional ear may have to turn

her head in awkward and uncomfortable ways if the sound source directions of

the target and noise sources are unfavorable. Furthermore, Long and colleagues

(2006) showed that patients with bilateral cochlear implants can experience

binaural unmasking on the basis of envelope-based ITDs, suggesting that they

should be able to use their binaural hearing to improve speech perception in

noisy environments beyond what is achievable by the better ear effect alone.

8.7 Brain Plasticity and Cochlear Implantation

You may recall from

chapter 7 that the auditory system is highly susceptible to long-term changes

in input. A very important issue for the successful outcome of cochlear

implantation is therefore the age at which hearing is lost and the duration of

deafness prior to implantation. We know, for example, that early sensorineural hearing loss can cause neurons in the central

auditory system to degenerate and die (Shepherd & Hardie,

2000), and can also alter the synaptic and membrane properties of those neurons

that survive (Kotak, Breithaupt,

& Sanes, 2007). Neural pathways can also be

rewired, especially if hearing is lost on one side only (Hartley & King,

2010). Since bilateral cochlear implantees often

receive their implants at different times, their auditory systems will

potentially have to endure a period of complete deafness followed by deafness

in one ear.

A number of studies highlight the

importance of early implantation for maximizing the benefits of electrical

hearing. The latencies of cortical auditory evoked potentials reach normal

values only if children are implanted before a certain age (Sharma & Dorman,

2006), while studies in deaf cats fitted with a cochlear implant have also

shown that cortical response plasticity declines with age (Kral

& Tillein, 2006). Another complication is that

the absence of sound-evoked inputs, particularly during early development,

results in the auditory cortex being taken over by other sensory modalities (Doucet et al., 2006; Lee et al., 2007). Now cross-modal

reorganization can be very useful, making it possible, for example, for blind

patients to localize sounds more accurately (Röder et

al., 1999), or for deaf people to make better use of visual speech. On the

other hand, if auditory areas of the brain in the deaf are taken over by other

sensory inputs, the capacity of those areas to process restored auditory inputs

provided by cochlear implants may be limited.

We have discussed several examples

in this book where auditory and visual information is fused in ways that can

have profound effects on perception. A good example of this is the McGurk effect, in which viewing someone articulating one

speech sound while listening to another sound can change what we hear (see chapter

4 and accompanying video clip on the book’s web site). If congenitally deaf

children are fitted with cochlear implants within the first two and a half

years of life, they experience the McGurk effect.

However, after this age, auditory and visual speech cues can no longer be fused

(Schorr et al., 2005), further emphasizing the importance

of implantation within a sensitive period of development. Interestingly,

patients who received cochlear implants following postlingual

deafness—who presumably benefitted from multisensory experience early in

life—are better than listeners with normal hearing at fusing visual and

auditory signals, which improves their understanding

of speech in situations where both sets of cues are present (Rouger et al., 2007).

On the basis of the highly dynamic

way in which the brain processes auditory information, it seems certain that

the capacity of patients to interpret the distorted signals provided by

cochlear implants will be enhanced by experience and training strategies that

encourage their use in specific auditory tasks. Indeed, it is almost certainly

only because the brain possesses such remarkable adaptive capabilities that

cochlear implants work at all.