7 Development, Learning, and Plasticity

- 7.1 When Does Hearing Start?

- 7.2 Hearing Capabilities Improve after Birth

- 7.3 The Importance of Early Experience: Speech and Music

- 7.4 Maturation of Auditory Circuits in the Brain

- 7.5 Plasticity in the Adult Brain

We have so far

considered the basis by which the auditory system can detect, localize, and

identify the myriad sounds that we might encounter. But how does our perception

of the acoustic environment arise during development? Are we born with these

abilities or do they emerge gradually during childhood? It turns out that much

of the development of the auditory system takes place before birth, enabling

many species, including humans, to respond to sound as soon as they are born.

Nonetheless, the different parts of the ear and the central auditory pathways

continue to mature for some time after that. This involves a lot of remodeling

in the brain, with many neurons failing to survive until adulthood and others

undergoing changes in the number and type of connections they form with other

neurons. Not surprisingly, these wiring modifications can result in

developmental changes in the auditory sensitivity of the neurons. As a

consequence, auditory perceptual abilities mature over different timescales, in

some cases not reaching the levels typically seen in adults until several years

after birth.

A very important factor in the

development of any sensory system is that the anatomical and functional

organization of the brain regions involved is shaped by experience during

so-called sensitive or critical periods of early postnatal life. This

plasticity helps to optimize those circuits to an individual’s sensory environment.

But this also means that abnormal experience—such as a loss of hearing in

childhood—can have a profound effect on the manner in which neurons respond to

different sounds, and therefore on how we perceive them.

Although sensitive periods of

development have been described for many species and for many aspects of

auditory function, including the emergence of linguistic and musical abilities,

we must remember that learning is a lifelong process. Indeed, extensive

plasticity is seen in the adult brain, too, which plays a vital role in

enabling humans and animals to interact effectively with their acoustic

environment and provides the basis on which learning can improve perceptual

abilities.

7.1 When Does Hearing Start?

The development of the

auditory system is a complex, multistage process that begins in early embryonic

life. The embryo comprises three layers, which interact to produce the various

tissues of the body. One of these layers, the ectoderm, gives rise to both

neural tissue and skin. The initial stage in this process involves the

formation of the otic placode,

a thickening of the ectoderm in the region of the developing hindbrain. As a

result of signals provided by the neural tube, from which the brain and spinal

cord are derived, and by the mesoderm, the otic placode is induced to invaginate

and fold up into a structure called the otocyst, from

which the cochlea and otic ganglion cells—the future

auditory nerve—are formed. Interestingly, the external ear and the middle ear

have different embryological origins from that of the inner ear. As a

consequence, congenital abnormalities can occur independently in each of these

structures.

The neurons that will become part

of the central auditory pathway are produced within the ventricular zone of the

embryo’s neural tube, from where they migrate to their final destination in the

brain. Studies in animals have shown that the first auditory neurons to be

generated give rise to the cochlear nucleus, superior olivary

complex, and medial geniculate nucleus, with the

production of neurons that form the inferior colliculus

and auditory cortex beginning slightly later. In humans, all the subcortical auditory structures can be recognized by the eighth

fetal week. The cortical plate, the first sign of the future cerebral cortex,

also emerges at this time, although the temporal lobe becomes apparent as a

distinct structure only in the twenty-seventh week of gestation (Moore & Linthicum,

2009).

To serve their purpose, the newly

generated neurons must make specific synaptic connections with other neurons.

Consequently, as they are migrating, the neurons start to send out axons that

are guided toward their targets by a variety of molecular guidance cues produced by

those structures. These molecules are detected by receptors on the exploring

growth cones that form the tips of the growing axons, while other molecules

ensure that axons make contact with the appropriate region of the target

neurons. Robust synaptic connections can be established at an early stage—by

the fourteenth week of gestation in the case of the innervation

of hair cells by the spiral ganglion cells. On the other hand, another seven

weeks elapse before axons from the thalamus start to

make connections with the cortical plate.

At this stage of development, the

axons lack their insulating sheaths of myelin, which are required for the rapid

and reliable conduction of action potentials that is so important in the adult

auditory system. In humans, myelination of the

auditory nerve and the major brainstem pathways begins at the twenty-sixth week

of gestation, and it is at around this age that the first responses to sound

can be measured. One way of showing this is to measure event-related potentials

from the scalp of premature infants born soon after this age. But even within

the womb it is possible to demonstrate that the fetus can hear by measuring the

unborn baby’s movements or changes in heart rate that occur in response to vibroacoustic stimulation applied to the mother’s abdomen.

Such measurements have confirmed that hearing onset occurs at around the end of

the second trimester.

External sounds will, of course,

be muffled by the mother’s abdominal wall and masked by noises produced by her

internal organs. It is therefore perhaps not immediately clear what types of

sound would actually reach the fetus. Attempts to record responses from the

inner ear of fetal sheep, however, suggest that low-frequency speech could be

audible to human infants (Smith et al., 2003), and there is some evidence that,

toward the end of pregnancy, the human fetus not only responds to but can even

discriminate between different speech sounds (Shahidullah

& Hepper, 1994).

7.2 Hearing Capabilities Improve after Birth

Because of the

extensive prenatal development of the auditory system, human infants are born

with a quite sophisticated capacity to make sense of their auditory world. They

can readily distinguish between different phonemes, and are sensitive to the

pitch and rhythm of their mother’s voice. Within a few days of birth, babies

show a preference for their mother’s voice over that of another infant’s

mother, presumably as a result of their prenatal experience (DeCasper & Fifer, 1980). Perhaps more surprisingly,

various aspects of music perception can be demonstrated early in infancy. These

include an ability to distinguish different scales and chords and a preference

for consonant or pleasant-sounding intervals, such as the perfect fifth, over

dissonant intervals (Trehub, 2003), as well as

sensitivity to the beat of a rhythmic sound pattern (Winkler et al., 2009).

Whether these early perceptual

abilities are unique to human infants or specifically related to language and

music is still an open question. Mark Hauser at

It would be wrong to conclude from

this, however, that human infants can hear the world around them in the same

way that adults do. Almost all auditory perceptual abilities improve gradually

after birth, and the age at which adult performance is reached varies greatly

with the task. For example, sounds have to be played at a greater intensity to

evoke a response from an infant, and, particularly for low-frequency tones, it

can take as long as a decade until children possess the same low detection

thresholds seen in adults. The capacity to detect a change in the frequency of

two sequentially played tones also continues to improve over several years,

although frequency resolution—the detection of a tone of one frequency in the

presence of masking energy at other frequencies—seems to mature earlier.

Another aspect of hearing that

matures over a protracted period of postnatal development is sound

localization. While parents will readily attest to the fact that newborn

infants can turn toward their voices, the accuracy of these orienting responses

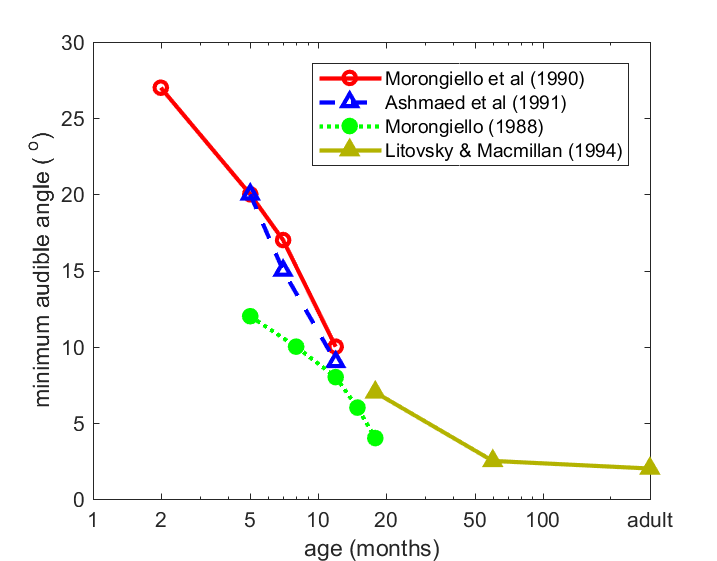

increases with age. Figure 7.1

shows that the minimum audible angle—the smallest

detectable change in sound source location—takes about 5 years to reach adult

values. As we saw in chapter 5, having two ears also helps in detecting

particular sounds against a noisy background. The measure of this ability, the

binaural masking level difference, takes at least 5 years and possibly much

longer to mature (Hall III, Buss, & Grose, 2007).

This is also the case for the precedence effect (Litovsky,

1997), indicating that the capacity to perceive sounds

in the reverberant environments we encounter in our everyday lives emerges over

a particularly long period.

Figure 7.1

Minimum audible

angles, a measure of the smallest change in the direction of a sound source that

can be reliably discriminated, decrease with age in humans.

From

Highly relevant to the perception

of speech and music is the development of auditory temporal processing.

Estimates of the minimum time period within which different acoustic events can

be distinguished have been obtained using a variety of methods. These include

the detection of amplitude and frequency modulation, gap detection, and nonsimultaneous masking paradigms. Although quite wide

variations have been found in the age at which adult values are reached, depending on

the precise task and type of sound used, it is clear that temporal resolution

also takes a long time to reach maturity. For example, “backward masking,”

which measures the ability of listeners to detect a tone that is followed

immediately by a noise, has been reported to reach adult levels of performance

as late as 15 years of age.

To make sense of all this, we need

to take several factors into account. First, there is, of course, the

developmental status of the auditory system. Although the ear and auditory

pathways are sufficiently far advanced in their development to be able to

respond to sound well before birth in humans, important and extensive changes

continue to take place for several years into postnatal life. Second, nonsensory or cognitive factors will contribute to the

performance measured in infants. These factors include attention, motivation,

and memory, and they often present particular challenges when trying to assess

auditory function in the very young.

We can account for the maturation

of certain hearing abilities without having to worry about what might be

happening in the brain at the time. This is because changes in auditory

performance can be attributed to the postnatal development of the ear itself.

For instance, the elevated thresholds and relatively flat audiogram seen in

infancy are almost certainly due to the immature conductive properties of the external

ear and the middle ear found at that age. As these structures grow, the

resonant frequencies of the external ear decrease in value and the acoustic

power transfer of the middle ear improves. In both cases, it takes several

years for adult values to be reached, a timeframe consistent with age-related

improvements in hearing sensitivity.

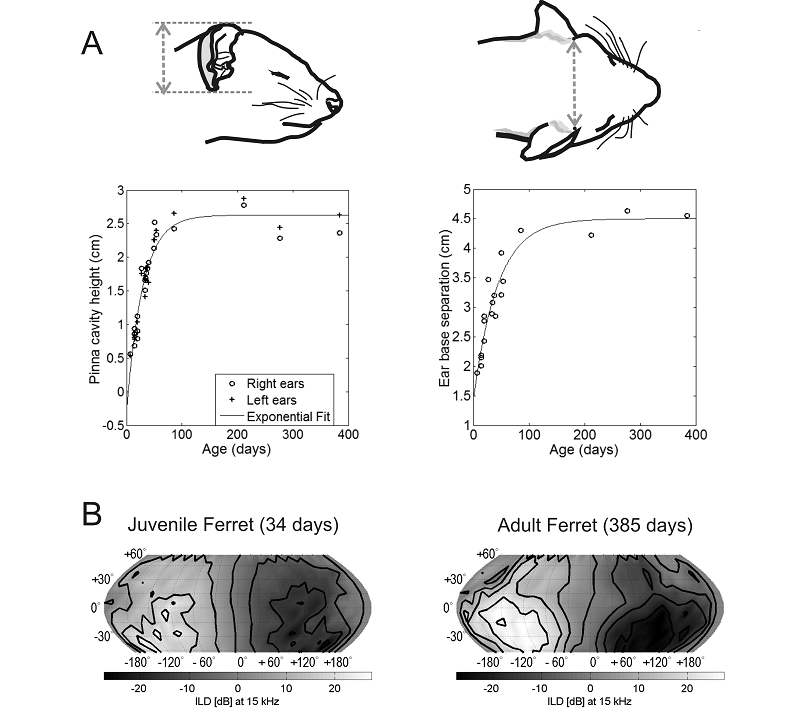

Growth of the external ears and

the head also has considerable implications for sound localization. As we saw

in chapter 5, the auditory system determines the direction of a sound source

from a combination of monaural and binaural spatial cues. The values of those

cues will change as the external ears grow and the distance between them

increases (figure 7.2). As we shall see later,

when we look at the maturation of the neural circuits that process spatial

information, age-related differences in the cue values can account for the way

in which the spatial receptive fields of auditory neurons change during

development. In turn, it is likely that this will contribute to the gradual

emergence of a child’s localization abilities.
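The effect of head growth on binaural cues can be made concrete with a simple worked example. The sketch below is not taken from the studies discussed here: it assumes a rigid spherical head and a distant source, and uses Woodworth's classic approximation, ITD = (r/c)(θ + sin θ), where r is the head radius, c the speed of sound, and θ the source azimuth. The function name woodworth_itd and the head radii are hypothetical, chosen only to mimic a growing head.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, in air at about 20 degrees C


def woodworth_itd(head_radius_m: float, azimuth_deg: float) -> float:
    """Interaural time difference (s) for an idealized rigid spherical head.

    Assumes a distant source and Woodworth's approximation
    ITD = (r / c) * (theta + sin(theta)), used here only for 0-90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this sketch only handles azimuths from 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / SPEED_OF_SOUND) * (theta + math.sin(theta))


if __name__ == "__main__":
    # Hypothetical head radii for a growing head (illustrative values only).
    for radius_cm in (6.0, 7.5, 8.75):
        max_itd_us = woodworth_itd(radius_cm / 100.0, 90.0) * 1e6
        print(f"radius {radius_cm:5.2f} cm -> max ITD {max_itd_us:6.1f} us")
```

Under these assumptions the largest ITD scales in direct proportion to head radius, so a neuron tuned to a particular ITD early in life would, without any change in its own properties, come to signal a different direction in space as the head grows.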

Figure 7.2

Growth of the head and

external ears changes the acoustic cue values corresponding to each direction

in space. (A) Age-related changes in the height of the external ear and in the

distance between the ears of the ferret, a species commonly used for studying auditory

development. These dimensions mature by around 4 months of age. (B) Variation

in interaural level differences (ILDs) for a 15-kHz

tone as a function of azimuth and elevation in a 34-day-old juvenile ferret

(left) and in an adult animal at 385 days of age (right). The range of ILDs is larger and their spatial pattern is somewhat

different in the older animal. Based on Schnupp,

Booth, and King (2003).

The range of audible frequencies

appears to change in early life as a result of developmental modifications in

the tonotopic organization of the cochlea. You should

now be very familiar with the notion that the hair cells near the base of the

cochlea are most sensitive to high-frequency sounds, whereas those located

nearer its apex are tuned to progressively lower frequencies. Although the

basal end of the cochlea matures first, studies in mammals and chicks have

shown that this region initially responds to lower sound frequencies than it

does in adults. This is followed by an increase in the sound frequencies to

which each region of the cochlea is most responsive, leading to an upward

expansion in the range of audible sound frequencies. Such changes have not been

described in humans, but it is possible that they take place before birth.

While the maturation of certain

aspects of auditory perception is constrained by the development of the ear,

significant changes also take place postnatally in

the central auditory system. An increase in myelination

of the auditory pathways results in a progressive reduction in the latency of

the evoked potentials measured at the scalp in response to sound stimulation.

At the level of the human brainstem, these changes are thought to be complete

within the first 2 years of life. However, Nina Kraus and colleagues have shown

that the brainstem responses evoked by speech sounds in 3- to 4-year-old

children are delayed and less synchronous than those recorded in older

children, whereas this difference across age is not observed with simpler

sounds (Johnson et al., 2008). But it is the neural circuits at higher levels

of the auditory system that mature most slowly, with sound-evoked cortical

potentials taking around 12 years to resemble those seen in adults (Wunderlich & Cone-Wesson, 2006).

7.3 The Importance of Early Experience: Speech and Music

Although it remains

difficult to determine how important the acoustic environment of the fetus is

for the prenatal development of hearing, there is no doubt that the postnatal

maturation of the central auditory pathways is heavily influenced by sensory

experience. Because this process is so protracted, there is ample opportunity

for the development of our perceptual faculties to be influenced by experience

of the sounds we encounter during infancy. As we shall see in the following

section, this also means that reduced auditory inputs, which can result, for

example, from early hearing loss, and even information provided by the other

senses can have a profound impact on the development of the central auditory

system.

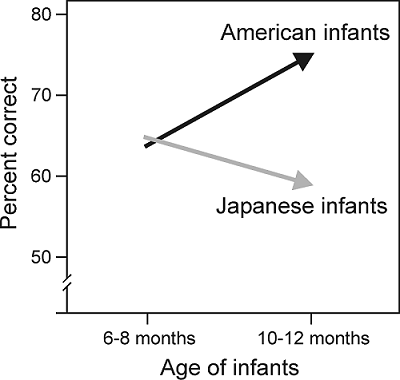

The importance of experience in

shaping the maturing auditory system is illustrated very clearly by the

acquisition of language during early childhood. Infants are initially able to

distinguish speech sounds in any language. But as they learn from experience,

this languagewide capacity quickly narrows. Indeed,

during the first year of life, their ability to perceive phonetic contrasts in

their mother tongue improves, while they lose their sensitivity to certain

sound distinctions that occur only in foreign languages. You may remember from

chapter 4 that this is nicely illustrated by the classic example of adult

Japanese speakers, who struggle to distinguish the phonetic units “r” from “l,”

even though, at 7 months of age, Japanese infants are as adept at doing so as

infants growing up in English-speaking homes (figure 7.3).

In Japanese, these consonants fall within a single perceptual category, so

Japanese children “unlearn” the ability to distinguish them. This process of

becoming more sensitive to acoustic distinctions at phoneme boundaries of one’s

mother tongue, while becoming less sensitive to distinctions away from them,

has been found to begin as early as 6 months of age for vowels and by 10 months

for consonants (Kuhl & Rivera-Gaxiola,

2008). Perceptual narrowing based on a child’s experience during infancy is not

restricted to spoken language. Over the same time period, infants also become

more sensitive to the correspondence between speech sounds and the talker’s

face in their own language, and less so for non-native language (Pons et al.,

2009).

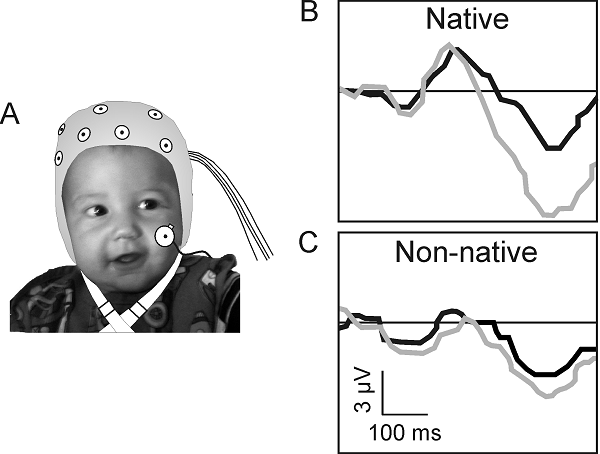

Figure 7.3

The

effects of age on speech perception performance in a cross-language study of

the perception of American English /r–l/ sounds by American and Japanese

infants.

From Kuhl et al. (2003).

These changes in speech perception

during the first year of life are driven, at least in part, by the statistical

distribution of speech sounds in the language to which the infant is exposed.

Thus, familiarizing infants with artificial speech sounds in which this

distribution has been manipulated experimentally alters their subsequent

ability to distinguish some of those sounds (Maye, Werker, & Gerken, 2002). But

social interactions also seem to play a role. Kuhl, Tsao, and Liu (2003) showed that 9-month-old American

infants readily learned phonemes and words in Mandarin Chinese, but only if they

were able to interact with a live Chinese speaker. By contrast, no learning

occurred if the same sounds were delivered by television or audiotape.
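The idea that the shape of a sound distribution could signal how many categories are present can be illustrated with a toy example. The sketch below is a deliberate simplification, not a model of infant learning or of the Maye, Werker, and Gerken (2002) procedure: it takes hypothetical token counts along a hypothetical eight-step acoustic continuum and simply counts the peaks, treating a two-peaked (bimodal) distribution as a hint of two categories and a single-peaked (unimodal) one as a hint of one. The function count_modes and the counts are illustrative inventions.

```python
from typing import Sequence


def count_modes(counts: Sequence[int]) -> int:
    """Count peaks in a token-frequency histogram along an acoustic continuum.

    Runs of equal neighbouring values are collapsed first, so a flat-topped
    peak is counted once. Values beyond either end are treated as -infinity.
    """
    # Collapse runs of identical counts (e.g. 4, 4 -> 4).
    runs = [c for i, c in enumerate(counts) if i == 0 or c != counts[i - 1]]
    padded = [float("-inf")] + list(runs) + [float("-inf")]
    # A peak is strictly higher than both of its (collapsed) neighbours.
    return sum(
        1
        for i in range(1, len(padded) - 1)
        if padded[i] > padded[i - 1] and padded[i] > padded[i + 1]
    )


# Hypothetical familiarization counts for tokens 1-8 of a continuum.
bimodal = [1, 4, 2, 1, 1, 2, 4, 1]
unimodal = [1, 1, 2, 4, 4, 2, 1, 1]

if __name__ == "__main__":
    print("bimodal:", count_modes(bimodal))    # two peaks
    print("unimodal:", count_modes(unimodal))  # one peak
```

Real listeners face noisy, overlapping distributions, so any realistic account would need something more robust than counting peaks, such as fitting a statistical mixture model to the tokens heard.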

Not surprisingly, as a child’s

perceptual abilities become increasingly focused on processing the language(s)

experienced during early life, the capacity to learn a new language declines.

In addition to the loss in the ability to distinguish phonemes in other

languages during the first year of life, other aspects of speech acquisition,

including the syntactic and semantic aspects of language, also appear to be

developmentally regulated (Ruben, 1997). The critical period of development

during which language can be acquired with little effort lasts for about 7

years. New language learning then becomes more difficult, despite the fact that

other cognitive abilities improve with age.

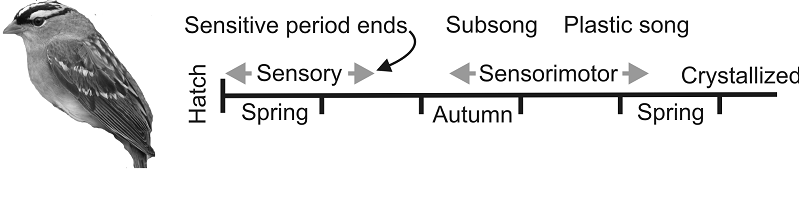

Sensitive periods have also been

characterized for other aspects of auditory development. Perhaps the most

relevant to language acquisition in humans is vocal learning in songbirds (figure

7.4). Young birds learn their songs by listening to adults

during an initial sensitive period of development, the duration of which varies

from one species to another and with acoustic experience and the level of

hormones such as testosterone. After this purely sensory phase of learning, the

birds start to make their own highly variable vocal attempts, producing what is

known as “subsong,” the equivalent of babbling in

babies. They then use auditory feedback during a sensorimotor

phase of learning to refine their vocalizations until a stable adult song is

crystallized (Brainard & Doupe,

2002).

Figure 7.4

Birdsong

learning stages.

In seasonal species, such as the white-crowned sparrow, the sensory and sensorimotor phases of learning are separated in time. The

initial vocalizations (subsong) produced by young

birds are variable and generic across individuals. Subsong

gradually evolves into “plastic song,” which, although still highly variable,

begins to incorporate some recognizable elements of tutor songs. Plastic song

is progressively refined until the bird crystallizes its stable adult song. Other

songbirds show different time courses of learning.

From Brainard and Doupe (2002).

Thus, both human speech

development and birdsong learning rely on the individual being able to hear the

voices of others, as illustrated by Peter Marler’s

observation that, like humans, some songbirds possess regional dialects (Marler & Tamura, 1962). The importance of hearing the

tutor song during a sensitive period of development has been demonstrated by

raising songbirds with unrelated adults of the same species; as they mature,

these birds start to imitate the songs produced by the tutor birds. On the other

hand, birds raised in acoustic isolation—so that they are prevented from

hearing the song of conspecific adults—produce abnormal vocalizations. This is

also the case if songbirds are deafened before they have the opportunity to

practice their vocalizations, even if they have previously been exposed to

tutor songs; this highlights the importance of being able to hear their own

voices as they learn to sing. In a similar vein, profound hearing loss has a

detrimental effect on speech acquisition in children.

A related area where experience

plays a key role is in the development of music perception. We have already

pointed out that human infants are born with a remarkably advanced sensitivity

to different aspects of music. As with their universal capacity to distinguish

phonemes, infants initially respond in a similar way to the music of any

culture. Their perceptual abilities change with experience, however, and become

increasingly focused on the style of music to which they have been exposed. For

example, at 6 months of age, infants are sensitive to rhythmic variations in

the music of different cultures, whereas 12-month-olds show a culture-specific

bias (Hannon & Trehub, 2005). The perception of

rhythm in foreign music can nonetheless be improved at 12 months by brief

exposure to an unfamiliar style of music, whereas this is not the case in

adults.

Findings such as these again point

to the existence of a sensitive period of development during which perceptual

abilities can be refined by experience. As with the maturation of speech

perception, passive exposure to the sounds of a particular culture probably

leads to changes in neural sensitivity to the structure of music. But we also have

to consider the role played by musical training. In chapter 3, we introduced

the concept of absolute pitch—the ability to identify the pitch of a sound in

the absence of a reference pitch. It seems likely that some form of musical

training during childhood is a requirement for developing absolute pitch, and the

likelihood of having this ability increases if that training starts earlier.

This cannot, however, be the only explanation, as not all trained musicians

possess absolute pitch. In addition, genetic factors play a role in determining

whether or not absolute pitch can be acquired.

There is considerable interest in

being able to measure what actually goes on in the brain as auditory perceptual

abilities change during development and with experience. A number of noninvasive

brain imaging and electrophysiological recording methods are available to do

this in humans. Using these approaches, it has been shown that although

language functions are lateralized at birth, the regions of the cerebral cortex

involved are less specialized and the responses recorded from them are much

slower in infants than they are in adults (Friederici,

2006; Kuhl & Rivera-Gaxiola,

2008). Event-related potentials (ERPs) are particularly

suitable for studying time-locked responses to speech in young children. ERP

measurements suggest that by 7.5 months of age, the brain is more sensitive to

phonetic contrasts in the child’s native language than in a non-native language

(figure 7.5; Kuhl

& Rivera-Gaxiola, 2008). This is in line with

behavioral studies of phonetic learning. Intriguingly, the differences seen at

this age in the ERP responses to native and non-native contrasts seem to

provide an indicator of the rate at which language is subsequently acquired.

Neural correlates of word learning can be observed toward the end of the first

year of life, whereas violations of syntactic word order result in ERP

differences at around 30 months after birth.

Figure 7.5

Neural correlates of

speech perception in infancy. (A) Human infant aged 7.5 months wearing an ERP electrocap. (B) ERP waveforms recorded at this age from one

sensor location in response to a native (English) and non-native (Mandarin

Chinese) phonetic contrast. The black waveforms show the response to a standard

stimulus, whereas the gray waveforms show the response to the deviant stimulus.

The difference in amplitude between the standard and deviant waveforms is

larger in the case of the native English contrast, implying better

discrimination than for the non-native speech sounds.

Adapted from Kuhl and Rivera-Gaxiola (2008).

Some remarkable examples of brain

plasticity have been described in trained musicians. Of course, speech and

music both involve production (or playing, in the case of a musical instrument)

as much as listening, so it is hardly surprising that motor as well as auditory

regions of the brain can be influenced by musical training and experience. Neuroimaging studies have shown that musical training can

produce structural and functional changes in the brain areas that are activated

during auditory processing or when playing an instrument, particularly if

training begins in early childhood. These changes most commonly take the form

of an enlargement of the brain areas in question and enhanced musically related

activity in them. One study observed structural brain plasticity in motor and

auditory cortical areas of 6-year-old children who received 15 months of

keyboard lessons, which was accompanied by improvements in musically relevant

skills (Hyde et al., 2009). Because no anatomical differences were found between

these children and an age-matched control group before the lessons started, it

appears that musical training can have a profound effect on the development of

these brain areas. In fact, plasticity is not restricted to the cerebral cortex,

as functional differences are also found in the auditory brainstem of trained

musicians (Kraus et al., 2009).

Imaging studies have also provided

some intriguing insights into the basis of musical disorders. We probably all

know someone who is tone deaf or unable to sing in tune. This condition may

arise as a result of a reduction in the size of the arcuate

fasciculus, a fiber tract that connects the temporal and frontal lobes of the

cerebral cortex (Loui, Alsop, & Schlaug, 2009). Consequently, tone deafness, which is found

in about 10% of the population, is likely to reflect reduced links between the

brain regions involved in the processing of sound, including speech and music,

and those responsible for vocal production.

7.4 Maturation of Auditory Circuits in the Brain

To track the changes

that take place in the human brain during development and learning, we have to

rely on noninvasive measures of brain anatomy and function. As in the mature

brain, however, these methods tell us little about what is happening at the

level of individual nerve cells and circuits. This requires a different

approach, involving the use of more invasive experimental techniques in

animals. In this section, we look at some of the cellular changes that take

place during development within the central auditory pathway, and examine how

they are affected by changes in sensory inputs.

The connections between the spiral

ganglion cells and their targets in the cochlea and the brainstem provide the

basis for the tonotopic representation of sound

frequency within the central auditory system. These connections therefore have

to be organized very precisely, and are thought to be guided into place at a

very early stage of development by chemical signals released by the target

structures (Fekete & Campero,

2007). The action potentials that are subsequently generated by the axons are

not responsible just for conveying signals from the cochlea to the brain. They

also influence the maturation of both the synaptic endings of the axons,

including the large endbulbs of Held, which, as we

saw in chapter 5, are important for transmitting temporal information with high

fidelity, and the neurons in the cochlear nucleus (Rubel

& Fritzsch, 2002). This is initially achieved

through action potentials that are generated spontaneously, in the absence of

sound, which are critical for the survival of the cochlear nucleus neurons

until the stage at which hearing begins.

The earliest sound-evoked responses

are immature in many ways. During the course of postnatal development,

improvements are seen in the thresholds of auditory neurons, in their capacity

to follow rapidly changing stimuli, and in phase locking, while maximum firing

rates increase and response latencies decrease. Some of these response

properties mature before others, and the age at which they do so varies at

different levels of the auditory pathway (Hartley & King, 2010). Because the

auditory cortex is the last structure to mature, a number of studies have focused on

its development. Changes occur in the frequency selectivity

of cortical neurons during infancy, a process that is greatly influenced by the

acoustic environment. For example, Zhang, Bao, and Merzenich (2001) showed that exposing young rats to

repeated tones of one frequency led to a distortion of the tonotopic

map, with a greater proportion of the auditory cortex now devoted to that

frequency than to other values. This does not necessarily mean that the animals

now hear better at these frequencies though; they actually end up being less

able to discriminate sound frequencies within the enlarged representation, but

better at doing so for those frequencies where the tonotopic

map is compressed (Han et al., 2007). This capacity for cortical reorganization

is restricted to a sensitive period of development, and different sensitive

periods have been identified for neuronal sensitivity to different sound

features, which coincide with the ages at which those response properties

mature (Insanally et al., 2009). Linking studies such

as these, in which animals are raised in highly artificial and structured

environments, to the development of auditory perception in children is

obviously not straightforward. It is clear, however, that the coding of

different sounds by cortical neurons is very dependent on experience during

infancy, and this is highly likely to influence the emergence of perceptual

skills.

While the aforementioned studies

emphasize the developmental plasticity of the cortex, subcortical

circuits can also undergo substantial refinements under the influence of

cochlear activity. These changes can have a considerable impact on the coding

properties—particularly those relating to sound source localization—of auditory

neurons. Neural sensitivity to ILDs and ITDs has been

observed at the youngest ages examined in the LSO (Sanes

& Rubel, 1988) and MSO (Seidl

& Grothe, 2005), respectively. The inhibitory

projection from the MNTB to the LSO, which gives rise to neural sensitivity to ILDs, undergoes an activity-dependent reorganization during

the normal course of development (Kandler, 2004).

Many of the initial connections die off, and those that remain, rather

bizarrely, switch from being excitatory to inhibitory before undergoing further

structural remodeling. In chapter 5, we saw that precisely timed inhibitory

inputs to the gerbil MSO neurons can adjust their ITD sensitivity, so that the

steepest—and therefore most informative—regions of the tuning functions lie

across the range of values that can occur naturally given the size of the head.

Seidl and Grothe (2005)

showed that in very young gerbils these glycinergic

synapses are initially distributed uniformly along each of the two dendrites of

the MSO neurons. A little later in development, the dendritic synapses disappear, leaving

inhibitory inputs only on or close to the soma of the neurons, which is the

pattern seen in adult gerbils (figure 7.6).

This anatomical rearrangement alters the ITD sensitivity of the neurons, but

occurs only if the animals receive appropriate auditory experience. If they are

denied access to binaural localization cues, the infant distribution persists

and the ITD functions fail to mature properly.
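The argument about slopes can be made concrete with a toy calculation. The Python sketch below is a minimal illustration under stated assumptions, not a model taken from the studies cited: it treats IPD tuning as an idealized cosine, assumes a 500-Hz tone and a largest naturally occurring ITD of about 135 µs for a gerbil-sized head (both hypothetical values chosen for illustration), and compares how steep the tuning curve is, on average, across the physiological IPD range when its peak lies at 0 (the juvenile pattern) or is shifted by a quarter of a cycle (closer to the adult pattern). The function names are likewise hypothetical.

```python
import numpy as np

# Hypothetical parameter values, chosen for illustration only
FREQ_HZ = 500.0                     # tone frequency
MAX_ITD_S = 135e-6                  # assumed largest ITD for a gerbil-sized head
MAX_IPD_CYC = FREQ_HZ * MAX_ITD_S   # corresponding largest IPD, in cycles


def tuning_slope(ipd_cyc, best_ipd_cyc):
    """Slope of an idealized cosine tuning curve
    r(ipd) = 0.5 * (1 + cos(2*pi*(ipd - best_ipd))),
    in normalized rate per cycle of IPD."""
    return -np.pi * np.sin(2 * np.pi * (ipd_cyc - best_ipd_cyc))


def mean_abs_slope_in_range(best_ipd_cyc, n=201):
    """Average steepness of the curve across the physiological IPD range."""
    ipds = np.linspace(-MAX_IPD_CYC, MAX_IPD_CYC, n)
    return np.mean(np.abs(tuning_slope(ipds, best_ipd_cyc)))


juvenile = mean_abs_slope_in_range(0.0)   # peak at 0 IPD
adult = mean_abs_slope_in_range(0.25)     # peak shifted by a quarter cycle
print(f"largest physiological IPD: {MAX_IPD_CYC:.3f} cycles")
print(f"mean |slope|, peak at 0:        {juvenile:.2f}")
print(f"mean |slope|, peak at 0.25 cyc: {adult:.2f}")
```

With the peak at 0, the whole physiological range falls on the flat top of the curve, so small changes in IPD barely alter the firing rate; shifting the peak places the steepest part of the curve at the midline, where, for a neuron with constant response variability, small IPD changes would be easiest to detect.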

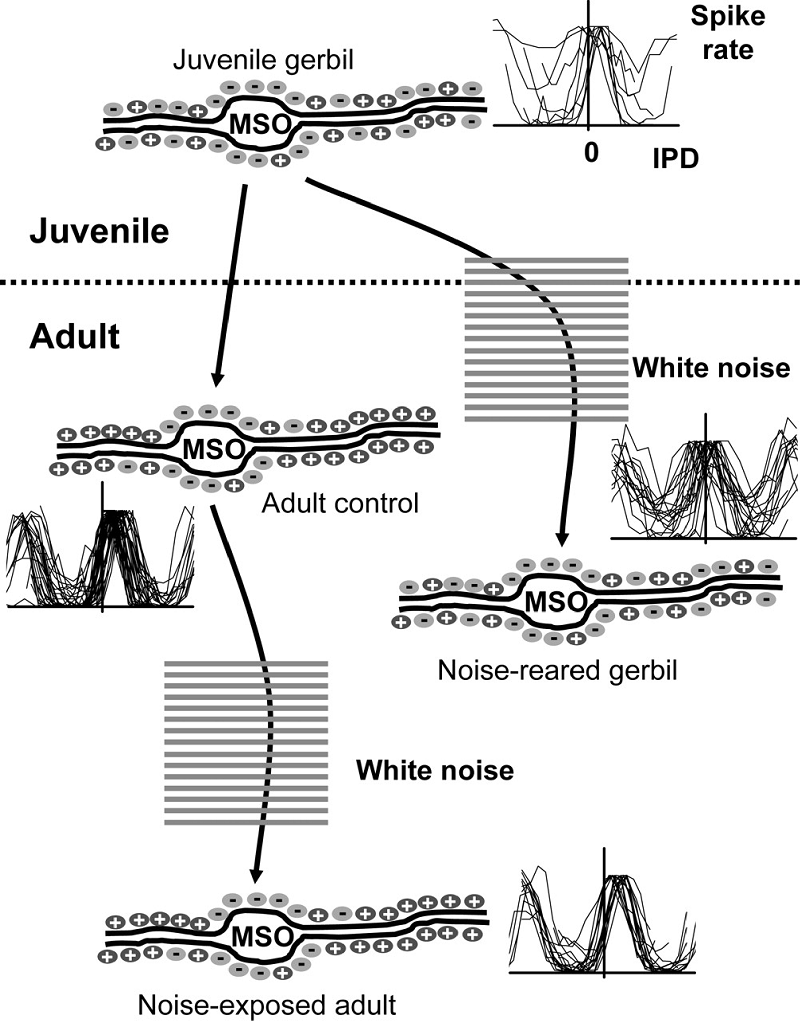

Figure 7.6

Maturation of

brainstem circuits for processing interaural time differences. In juvenile

gerbils, at around the time of hearing onset, excitatory and inhibitory inputs

are distributed on the dendrites and somata of

neurons in the medial superior olive (MSO). At this age, neurons prefer

interaural phase differences (IPD) around 0. In adult gerbils, glycinergic inhibition is restricted to the cell somata and is absent from the dendrites, and IPD response

curves are shifted away from 0, so that the maximal slope lies within the

physiological range. This developmental refinement depends on acoustic

experience, as demonstrated by the effects of raising gerbils in omnidirectional white noise, which preserves the juvenile

state. Exposing adults to noise has no effect on either the distribution of glycinergic synapses on the MSO neurons or their IPD

functions.

Used with permission from Seidl and Grothe (2005).

Changes in binaural cue

sensitivity would be expected to shape the development of the spatial receptive

fields of auditory neurons and the localization behaviors to which they

contribute. Recordings from the superior colliculus (Campbell

et al., 2008) and auditory cortex (Mrsic-Flogel et

al., 2003) have shown that the spatial tuning of the neurons is indeed much

broader in young ferrets than it is in adult animals. But it turns out that

this is due primarily to the changes in the localization cue values that take

place as the head and ears grow. Indeed, presenting infant animals with stimuli

through virtual adult ears led to an immediate sharpening in the spatial

receptive fields. This demonstrates that both peripheral and central auditory

factors have to be taken into account when assessing how adult processing

abilities are reached.

Nevertheless, it is essential that

the neural circuits involved in sound localization are shaped by experience. As

we have seen, the values of the auditory localization cues depend on the size

and shape of the head and external ears, and consequently will vary from one individual

to another. Each of us therefore has to learn to localize with our own ears.

That this is indeed the case has been illustrated by showing that humans

localize virtual space stimuli more accurately when the stimuli are generated

from acoustical measurements made from their own ears than from the ears of

other individuals (Wenzel et al., 1993).

Plasticity of auditory spatial

processing has been demonstrated by manipulating the sensory cues available.

For example, inducing a reversible conductive hearing loss by plugging one ear

will alter the auditory cue values corresponding to different directions in

space. Consequently, both sound localization accuracy and the spatial tuning of

auditory neurons will be disrupted. However, if barn owls (Knudsen, Esterly, & Knudsen, 1984) or ferrets (King, Parsons,

& Moore, 2000) are raised with a plug inserted in one ear, they learn to

localize sounds accurately (figure 7.7A).

Corresponding changes are seen in the optic tectum

(Knudsen, 1985) and superior colliculus (King et al.,

2000), where, despite the abnormal cues, a map of auditory space emerges in

register with the visual map (figure 7.7B).

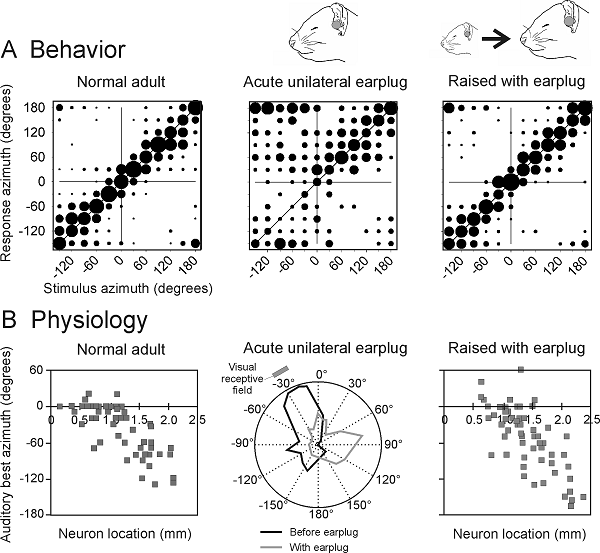

Figure 7.7

Auditory experience

shapes the maturation of sound localization behavior and the map of auditory

space in the superior colliculus. (A)

Stimulus-response plots showing the combined data of three normally reared

ferrets (normal adult ferrets), another three animals just after inserting an

earplug into the left ear (adult left earplug), and three ferrets that had been

raised and tested with the left ear occluded with a plug that produced 30- to 40-dB

attenuation (reared with left earplug). These plots illustrate the distribution

of approach-to-target responses (ordinate) as a function of stimulus location

(abscissa). The stimuli were bursts of broadband noise. The size of the dots

indicates, for a given speaker angle, the proportion of responses made to

different response locations. Occluding one ear disrupts sound localization

accuracy, but adaptive changes take place during development that enable the

juvenile plugged ferrets to localize sound almost as accurately as the

controls. (B) The map of auditory space in the ferret SC, illustrated by

plotting the best azimuth of neurons versus their location within the nucleus.

Occluding one ear disrupts this spatial tuning, but, as with the behavioral

data, near-normal spatial tuning is present in ferrets that were raised with

one ear occluded.

At the end of chapter 5, we

discussed the influence that vision can have over judgments of sound source

location in humans. A similar effect is also seen during development if visual

and auditory cues provide spatially conflicting information. This has been

demonstrated by providing barn owls with spectacles containing prisms that

shift the visual field relative to the head. A compensatory

shift in sound-evoked orienting responses and in the auditory

spatial receptive fields of neurons in the optic tectum

occurs in response to the altered visual inputs, which is brought about by a

rewiring of connections in the midbrain (figure 7.8; Knudsen,

1999). This experiment was possible because barn owls have a very limited

capacity to move their eyes. In mammals, compensatory eye movements would

likely confound the results of using prisms. Nevertheless, other approaches

suggest that vision also plays a guiding role in aligning the different sensory

representations in the mammalian superior colliculus

(King et al., 1988). Studies in barn owls have shown that experience-driven

plasticity is most pronounced during development, although the sensitive period

for visual refinement of both the auditory space map and auditory localization

behavior can be extended under certain conditions (Brainard

& Knudsen, 1998).

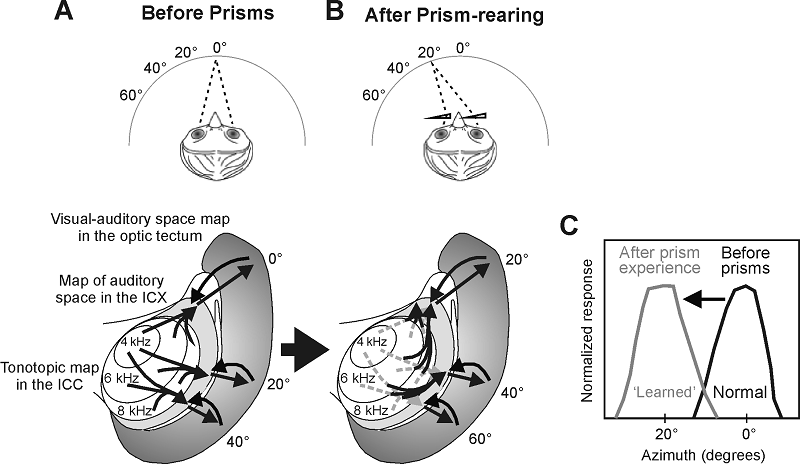

Figure 7.8

Visual experience

shapes the map of auditory space in the midbrain of the barn owl. (A) The external nucleus of the owl's

inferior colliculus (ICX) contains a map of auditory

space, which is derived from topographic projections that combine spatial

information across different frequency channels in the central nucleus of the

inferior colliculus (ICC). The ICX map of auditory

space is then conveyed to the optic tectum, where it

is superimposed on a map of visual space. (B) From around 40 days after

hatching, the auditory space maps in both the optic tectum

and the ICX are refined by visual experience. This has been demonstrated by

chronically shifting the visual field in young owls by mounting prisms in front

of their eyes. The same visual stimulus now activates a different set of neurons

in the optic tectum. The auditory space maps in the

ICX and tectum gradually shift by an equivalent

amount in prism-reared owls, thereby reestablishing the alignment with the

optically displaced visual map in the tectum. This

involves growth of novel projections from the ICC to the ICX (unbroken lines);

the original connections remain in place but are suppressed (broken lines).

Finally, we need to consider how

complex vocalizations are learned. This has so far been quite difficult to

study in nonhuman species, but studies of birdsong learning have provided some

intriguing insights into the neural processing of complex signals that evolve

over time, and there is every reason to suppose that similar principles will

apply to the development of sensitivity to species-specific vocalizations in

mammals. In section 7.3, we described how vocal learning in songbirds is guided

by performance feedback during a sensitive period of development. Several

forebrain areas are thought to be involved in the recognition of conspecific

song. In juvenile zebra finches, neurons in field L, the avian equivalent of

the primary auditory cortex, are less acoustically responsive and less

selective for natural calls over statistically equivalent synthetic sounds than

they are in adult birds (Amin, Doupe,

& Theunissen, 2007). Neuronal selectivity for

conspecific songs emerges at the same age at which the birds express a

behavioral preference for individual songs (Clayton, 1988), implicating the

development of these response properties in the maturation of song recognition.

The auditory forebrain areas project to a cluster of structures, collectively

known as the “song system,” which have been shown to be involved in vocal

learning. Recording studies have shown that certain neurons of the song system

prefer the bird’s own song or the tutor’s song over other complex sounds,

including songs from other species (Margoliash,

1983), and that these preferences emerge following exposure to the animal’s own

vocal attempts (Solis & Doupe, 1999).

7.5 Plasticity in the Adult Brain

We have seen that many

different aspects of auditory processing and perception are shaped by

experience during sensitive periods of development. While the length of those

periods can vary with sound property, brain level, and species, and may be

extended by hormonal or other factors, it is generally accepted that the

potential for plasticity declines with age. That would seem to make sense,

since more stability may be desirable and even necessary in the adult brain to

achieve the efficiency and reliability of a mature nervous system. But it turns

out that the fully mature auditory system shows considerable adaptive

plasticity that can be demonstrated over multiple timescales.

Numerous examples have been

described where the history of stimulation can determine the responsiveness or

even the tuning properties of auditory neurons. For example, if the same

stimulus, say a tone of a particular frequency, is presented repeatedly, neurons

normally show a decrease in response strength. However, a different frequency

presented occasionally among the repeated tones still evokes a strong response

(Ulanovsky, Las, & Nelken, 2004). This phenomenon is known as stimulus-specific adaptation, and it

facilitates the detection of rare events and sudden changes in the acoustic

environment (see chapter 6). On the other hand, the sensitivity of the neurons

can change so that the most frequently occurring stimuli are represented more

precisely. A nice example of this “adaptive coding” was described by Dean and

colleagues (2005), who showed that the relationship between the firing rate of

inferior colliculus neurons and sound level can

change to improve the coding of those levels that occur with the highest

probability (figure 7.9). One important consequence of this is that a greater range of sound

levels can be encoded, even though individual neurons have a relatively limited

dynamic range.
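The logic of adaptive coding can be illustrated with a small simulation. The sketch below is a hypothetical toy model, not the analysis used by Dean and colleagues: it assumes a sigmoidal rate-level function with a fixed 20-dB dynamic range, a stimulus in which 80% of the levels come from a 12-dB-wide high-probability region centered on 63 dB SPL, and a crude adaptation rule in which the midpoint of the function tracks a running average of recent levels. All parameter values and function names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

DYNAMIC_RANGE_DB = 20.0   # assumed 10-90% rise width of one neuron's rate-level function


def rate_level(level_db, midpoint_db, max_rate=100.0):
    """Logistic rate-level function (spikes/s) that is steepest at midpoint_db."""
    k = 2 * np.log(9) / DYNAMIC_RANGE_DB   # makes the 10-90% rise span DYNAMIC_RANGE_DB
    return max_rate / (1.0 + np.exp(-k * (level_db - midpoint_db)))


def sample_levels(hp_center_db, n=5000, p_high=0.8, hp_width_db=12.0,
                  lo_db=21.0, hi_db=93.0):
    """Levels drawn mostly from a high-probability region, otherwise uniformly."""
    in_hp = rng.random(n) < p_high
    hp = rng.uniform(hp_center_db - hp_width_db / 2, hp_center_db + hp_width_db / 2, n)
    background = rng.uniform(lo_db, hi_db, n)
    return np.where(in_hp, hp, background)


def adapt_midpoint(levels_db, start_db=40.0, alpha=0.01):
    """Hypothetical adaptation rule: exponential running average of recent levels."""
    m = start_db
    for lvl in levels_db:
        m += alpha * (lvl - m)
    return m


def fraction_in_dynamic_range(levels_db, midpoint_db):
    """Share of presented levels that drive the neuron between 10% and 90% of max."""
    r = rate_level(levels_db, midpoint_db)
    return np.mean((r > 10.0) & (r < 90.0))


levels = sample_levels(hp_center_db=63.0)
fixed_mid = 40.0
adapted_mid = adapt_midpoint(levels)
print(f"adapted midpoint: {adapted_mid:.1f} dB SPL")
print(f"in dynamic range, fixed:   {fraction_in_dynamic_range(levels, fixed_mid):.2f}")
print(f"in dynamic range, adapted: {fraction_in_dynamic_range(levels, adapted_mid):.2f}")
```

A model neuron whose midpoint stays at 40 dB SPL is saturated by most of the levels presented, whereas the adapted neuron places most of them within its dynamic range, where changes in level still produce changes in firing rate.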

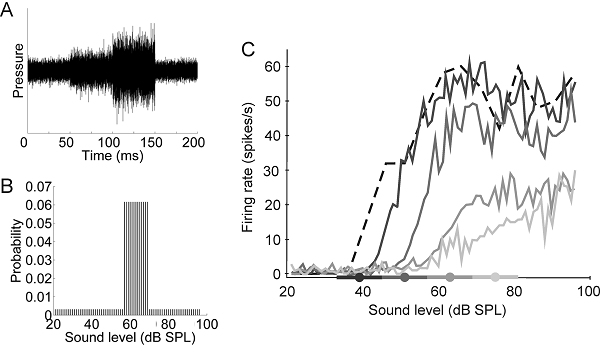

Figure 7.9

Adjustments in responses

of inferior collicular neurons to the mean ongoing

sound level. Broadband stimuli were presented in which the sound level was

varied over a wide range, but with a high-probability region centered on

different values. (A) Distribution of sound levels in the stimulus with

a high-probability region centered on 63 dB SPL. (B) Rate-level functions for

one neuron for four different sound level distributions, as indicated by the

filled circles and thick lines on the x axis. Note that the functions shift as

the range of levels over which the high-probability region is presented

changes.

From Dean, Harper,

and McAlpine (2005).

These changes occur in passive

hearing conditions and are therefore caused solely by changes in the

statistics of the stimulus input. If a particular tone frequency is given

behavioral significance by following it with an aversive stimulus, such as a

mild electric shock, the responses of cortical neurons to that frequency can be

enhanced (Weinberger, 2004). Responses to identical stimuli can even change

within a few minutes, and in different ways depending on the task being performed

(Fritz, Elhilali, & Shamma,

2005), implying that this plasticity may reflect differences in the meaning of

the sound according to the context in which it is presented.

Over a longer time course, the tonotopic organization of the primary auditory cortex of

adult animals can change following peripheral injury. If the hair cells in a

particular region of the cochlea are damaged as a result of exposure to a

high-intensity sound or some other form of acoustic trauma, the area of the

auditory cortex in which the damaged part of the cochlea would normally be

represented becomes occupied by an expanded representation of neighboring sound

frequencies (Robertson & Irvine, 1989). A similar reorganization of the

cortex has been found to accompany improvements in behavioral performance that

occur as a result of perceptual learning. Recanzone,

Schreiner, and Merzenich (1993) trained monkeys on a

frequency discrimination task and reported that the area of cortex representing

the tones used for training increased in parallel with improvements in

discrimination performance. Since then, a number of other changes in cortical

response properties have been reported as animals learn to respond to

particular sounds. This does not necessarily involve a change in the firing

rates or tuning properties of the neurons, as temporal firing patterns can be

altered as well (Bao et al., 2004; Schnupp et al., 2006).

There is a key difference between

the cortical changes observed following training in adulthood and those

resulting from passive exposure to particular sounds during sensitive periods

of development, in that the sounds used for training need to be behaviorally

relevant to the animals. This was demonstrated by Polley,

Steinberg, and Merzenich (2006), who trained rats

with the same set of sounds on either a frequency or a level recognition task.

An enlarged representation of the target frequencies was found in the cortex of

animals that learned the frequency recognition task, whereas the representation

of sound level in these animals was unaltered. By contrast, training to respond

to a particular sound level increased the proportion of neurons tuned to that

level without affecting their tonotopic organization.

These findings suggest that attention or other cognitive factors may dictate

how auditory cortical coding changes according to the behavioral significance

of the stimuli, which is thought to be signaled by the release in the auditory

cortex of neuromodulators such as acetylcholine.

Indeed, simply coupling the release of acetylcholine with a sound stimulus in

untrained animals is sufficient to induce a massive reorganization of the adult

auditory cortex (Kilgard, 2003).

Training can also dramatically

improve the auditory perceptual skills of humans, and the extent to which

learning generalizes to other stimuli or tasks can provide useful insights into

the underlying neural substrates (Wright & Zhang, 2009). As in the animal

studies, perceptual learning in humans can be accompanied by enhanced responses

in the auditory cortex (Alain et al., 2007; van Wassenhove

& Nagarajan, 2007). It is not, however,

necessarily the case that perceptual learning directly reflects changes in

brain areas that deal with the representation of the sound attribute in question.

Thus, a substantial part of the improvement in pitch discrimination during

perceptual learning tasks is nonspecific—for example, subjects playing a

computer game while hearing pure tones (but not explicitly attending to these

sounds) improve in pitch discrimination. The same was true even for subjects

who played a computer game without hearing any pure tones (Amitay,

Irwin, & Moore, 2006)! Such improvement must be due to general factors

governing task performance rather than to specific changes in the properties of

neurons in the auditory system.

In addition to improving the

performance of subjects with normal hearing, training can promote the capacity

of the adult brain to adjust to altered inputs. This has been most clearly

demonstrated in the context of sound localization. In the previous section, we

saw that the neural circuits responsible for spatial hearing are shaped by

experience during the phase of development when the localization cues are

changing in value as a result of head growth. Perhaps surprisingly, the mature

brain can also relearn to localize sound in the presence of substantially

altered auditory spatial cues. Hofman et al. (1998)

showed that adult humans can learn to use altered spectral localization cues.

To do this, they inserted a mold into each external ear, effectively changing

its shape and therefore the spectral-shape cues corresponding to different

sound directions. This led to an immediate disruption in vertical localization,

with performance gradually recovering over the next few weeks (figure

7.10).

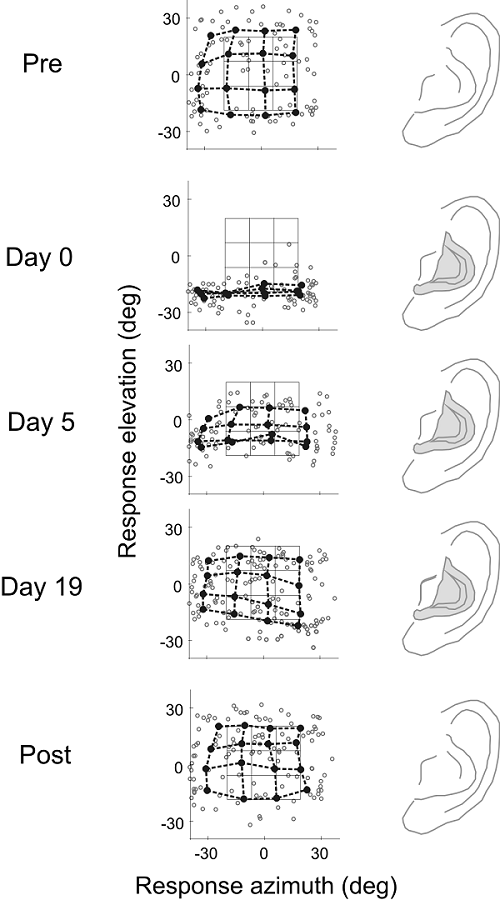

Figure 7.10

Learning

to localize sounds with new ears in adult humans. In this study, the accuracy of

sound localization was assessed by measuring eye movements made by human

listeners toward the location of broadband sound sources. The stimuli were

presented at random locations encompassed by the black grid in each of the

middle panels. The end points of all the saccadic eye movements made by one

subject are indicated by the red dots. The blue dots and connecting lines

represent the average saccade vectors for targets located within neighboring

sectors of the stimulus grid. The overlap between the response and target

matrices under normal listening conditions (precontrol)

shows that saccadic eye movements are quite accurate in azimuth and elevation.

Molds were then fitted to each external ear, which altered the spatial pattern

of spectral cues. Measurements made immediately following application of the molds

(day 0) showed that elevation judgments were severely disrupted, whereas

azimuth localization within this limited region of space was unaffected. The

molds were left in place for several weeks, and, during this period,

localization performance gradually improved before stabilizing at a level close

to that observed before the molds were applied. No aftereffect was observed

after the molds were removed (postcontrol), as the

subjects were able to localize sounds as accurately as they did in the precontrol condition.

From Hofman, Van Riswick, and Van Opstal (1998).

Because it relies much more on

binaural cues, localization in the horizontal plane becomes inaccurate if an

earplug is inserted in one ear. Once again, however, the mature auditory system

can learn to accommodate the altered cues. This has been shown in both humans (Kumpik, Kacelnik, & King,

2010) and ferrets (Kacelnik et al., 2006), and seems

to involve a reweighting away from the abnormal binaural cues so that greater

use is made of spectral-shape information. This rapid recovery of sound

localization accuracy occurs only if appropriate behavioral training is

provided (figure 7.11). It is also critically

dependent on the descending pathways from the auditory cortex to the midbrain (Bajo et al., 2010), which can modulate the responses of the

neurons found there in a variety of ways (Suga & Ma,

2003). This highlights a very important, and often ignored, aspect of auditory

processing, namely, that information passes down as well as up the pathway. As

a consequence of this, plasticity in subcortical as

well as cortical circuits is likely to be involved in the way humans and other

species interact with their acoustic environments.
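The idea of cue reweighting can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical model rather than the mechanism proposed in the studies cited: each response is taken to be a weighted average of an azimuth estimate derived from binaural cues, which the earplug is assumed to bias by 30°, and a noisier, unbiased estimate derived from spectral-shape cues. Feedback on each trial is assumed to drive a gradient-descent adjustment of the binaural weight. The bias, noise levels, learning rate, and function names are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)

PLUG_BIAS_DEG = 30.0   # hypothetical bias the earplug adds to the binaural estimate
BINAURAL_SD = 5.0      # hypothetical noise (deg) in the binaural estimate
SPECTRAL_SD = 12.0     # hypothetical noise (deg) in the spectral-shape estimate


def trial(true_az, w):
    """One localization trial: weighted mix of two cue-based azimuth estimates."""
    binaural = true_az + PLUG_BIAS_DEG + rng.normal(0, BINAURAL_SD)
    spectral = true_az + rng.normal(0, SPECTRAL_SD)
    response = w * binaural + (1 - w) * spectral
    return response, binaural, spectral


def train(n_trials=2000, w=0.9, lr=1e-6):
    """Adjust the binaural weight w by gradient descent on the squared error
    that trial-by-trial feedback is assumed to provide."""
    errors = []
    for _ in range(n_trials):
        true_az = rng.uniform(-90, 90)
        response, binaural, spectral = trial(true_az, w)
        err = response - true_az
        # d(err**2)/dw = 2 * err * (binaural - spectral)
        w -= lr * 2 * err * (binaural - spectral)
        w = min(max(w, 0.0), 1.0)   # keep the weight between 0 and 1
        errors.append(abs(err))
    return w, errors


w_final, errors = train()
print(f"final binaural weight: {w_final:.2f}")
print(f"mean |error|, first 100 trials: {np.mean(errors[:100]):.1f} deg")
print(f"mean |error|, last 100 trials:  {np.mean(errors[-100:]):.1f} deg")
```

In this toy model the binaural weight falls but does not reach zero, because the unbiased spectral estimate is assumed to be noisier; the error signal supplied on each trial stands in for behavioral training, and without it the weight would not change at all.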

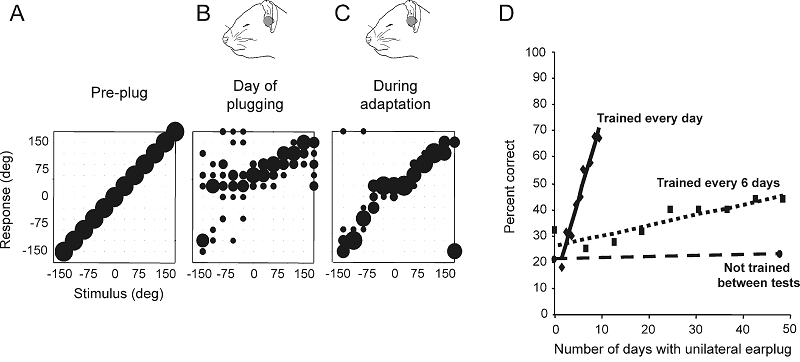

Figure 7.11

Plasticity

of spatial hearing in adult ferrets. (A–C) Stimulus-response plots showing the

distribution of responses (ordinate) made by a ferret as a function of stimulus

location in the horizontal plane (abscissa). The size of the dots indicates,

for a given speaker angle, the proportion of responses made to different

locations. Correct responses are those that fall on the diagonal line, whereas

all other responses represent errors of different magnitude. Prior to occlusion

of the left ear, the animal achieved 100% correct scores at all stimulus

directions (A), but performed poorly, particularly on the side of the earplug,

when the left ear was occluded (B). Further testing with the earplug still in

place, however, led to a recovery in localization accuracy (C). (D) Mean change

in performance (averaged across all speaker locations) over time in three

groups of ferrets with unilateral earplugs. No change was found in trained

ferrets (n = 3) that received an

earplug for 6 weeks but were tested only at the start and end of this period (circles

and dashed regression line). Two other groups of animals received an equivalent

amount of training while the left ear was occluded. Although the earplug was in

place for less time, a much faster rate of improvement was observed in the

animals that received daily training (n

= 3; diamonds and solid regression line) compared to those that were tested

every 6 days (n = 6; squares and

dotted regression line).

From Kacelnik et al. (2006).

We have seen in this chapter that

the auditory system possesses a truly remarkable and often underestimated

capacity to adapt to the sensory world. This is particularly the case in the

developing brain, when newly formed neural circuits are refined by experience

during specific and often quite narrow time windows. But the capacity to learn

and adapt to the constantly changing demands of the environment is a lifelong

process, which requires that processing in certain neural circuits can be

modified in response to both short-term and long-term changes in peripheral

inputs. The value of this plasticity is clear: Without it, the brain could not

be customized to the acoustical cues that underlie our capacity to localize

sound, or to the processing of our native language. But the

plasticity of the central auditory system comes at a potential cost, because it

means that a loss of hearing, particularly during development, can induce a

rewiring of connections and alterations in the activity of neurons, which could

give rise to conditions such as tinnitus, in which phantom sounds are

experienced in the absence of acoustic stimulation (Eggermont,

2008). At the same time, experience-dependent learning enhances the capacity of

the auditory system to accommodate the changes in input associated with hearing

loss and its restoration, a topic that we shall return to in the next chapter.